Complete Features

These features were completed when this image was assembled.

This outcome tracks the overall CoreOS Layering story as well as the technical items needed to converge CoreOS with RHEL image mode. This will provide operational consistency across the platforms.

ROADMAP for this Outcome: https://docs.google.com/document/d/1K5uwO1NWX_iS_la_fLAFJs_UtyERG32tdt-hLQM8Ow8/edit?usp=sharing

This work describes the tech preview state of On Cluster Builds. Major interfaces should be agreed upon at the end of this state.

In its current state, the BuildController unit test suite sometimes fails unexpectedly. This erodes confidence in the MCO unit test suite and can block PRs from merging, even when the changes a PR introduces are unrelated to BuildController. I suspect there is a race condition within the test suite which, combined with the suite's aggressive parallelism, causes these intermittent failures.

Done When:

- The BuildController unit test suite has been audited and refactored to remove this race condition.
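The BuildController tests are written in Go, so the following is only a Python sketch of the pattern suspected above, not MCO code. Each simulated test builds its own hypothetical `FakeCluster` instead of sharing one, so its assertions cannot be affected by other tests running concurrently.

```python
from concurrent.futures import ThreadPoolExecutor


class FakeCluster:
    """Hypothetical in-memory stand-in for the objects a BuildController test touches."""

    def __init__(self) -> None:
        self.builds: dict[str, str] = {}

    def create_build(self, name: str) -> None:
        self.builds[name] = "Pending"

    def complete_build(self, name: str) -> None:
        self.builds[name] = "Succeeded"


def run_build_test(test_id: int) -> bool:
    # Per-test fixture: no other parallel test can add, modify, or delete
    # the builds this test asserts on.
    cluster = FakeCluster()
    name = f"build-{test_id}"
    cluster.create_build(name)
    cluster.complete_build(name)
    # Exact-state assertion; with a shared fixture its result would depend
    # on which other tests happened to run first.
    return cluster.builds == {name: "Succeeded"}


def run_suite(parallelism: int = 8, tests: int = 100) -> list[bool]:
    """Run the simulated tests concurrently, as an aggressively parallel suite would."""
    with ThreadPoolExecutor(max_workers=parallelism) as pool:
        return list(pool.map(run_build_test, range(tests)))


if __name__ == "__main__":
    results = run_suite()
    print(f"{sum(results)}/{len(results)} simulated tests passed")
```

If `run_build_test` reused a single module-level `FakeCluster`, the exact-state check would pass or fail depending on scheduling, which is the kind of intermittent failure described above. In Go, the analogous fix is giving every `t.Parallel()` test its own fake client and fixtures.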

Description of problem:

In an on-cluster build pool, when we create an MC to update the SSH keys, we can't find the new keys on the nodes after the configuration is built and applied.

Version-Release number of selected component (if applicable):

$ oc get clusterversion
NAME      VERSION                              AVAILABLE   PROGRESSING   SINCE   STATUS
version   4.14.0-0.nightly-2023-08-30-191617   True        False         7h52m   Cluster version is 4.14.0-0.nightly-2023-08-30-191617

How reproducible:

Always

Steps to Reproduce:

1. Enable the on-cluster build functionality in the "worker" pool

2. Check the value of the current keys

$ oc debug node/$(oc get nodes -l node-role.kubernetes.io/worker -ojsonpath="{.items[0].metadata.name}") -- chroot /host cat /home/core/.ssh/authorized_keys.d/ignition

Warning: metadata.name: this is used in the Pod's hostname, which can result in surprising behavior; a DNS label is recommended: [must be no more than 63 characters]

Starting pod/sregidor-sr3-bfxxj-worker-a-h5b5jcopenshift-qeinternal-debug-ljxgx ...

To use host binaries, run `chroot /host`

ssh-rsa AAAA..................................................................................................................................................................qe@redhat.com

Removing debug pod ...

3. Create a new MC to configure the "core" user's SSH keys. We add two extra keys.

$ oc get mc -o yaml tc-59426-add-ssh-key-9tv2owyp
apiVersion: machineconfiguration.openshift.io/v1
kind: MachineConfig
metadata:
  creationTimestamp: "2023-09-01T10:57:14Z"
  generation: 1
  labels:
    machineconfiguration.openshift.io/role: worker
  name: tc-59426-add-ssh-key-9tv2owyp
  resourceVersion: "135885"
  uid: 3cf31fbb-7a4e-472d-8430-0c0eb49420fc
spec:
  config:
    ignition:
      version: 3.2.0
    passwd:
      users:
      - name: core
        sshAuthorizedKeys:
        - ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDPmGf/sfIYog...... mco_test@redhat.com
        - ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDf....... mco_test2@redhat.com

4. Verify that the new rendered MC contains the three keys:

$ oc get mcp worker

NAME CONFIG UPDATED UPDATING DEGRADED MACHINECOUNT READYMACHINECOUNT UPDATEDMACHINECOUNT DEGRADEDMACHINECOUNT AGE

worker rendered-worker-02d04d7c47cd3e08f8f305541cf85000 True False False 2 2 2 0 8h

$ oc get mc -o yaml rendered-worker-02d04d7c47cd3e08f8f305541cf85000 | grep users -A9
      users:
      - name: core
        sshAuthorizedKeys:
        - ssh-rsa AAAAB...............................qe@redhat.com
        - ssh-rsa AAAAB...............................mco_test@redhat.com
        - ssh-rsa AAAAB...............................mco_test2@redhat.com
    storage:

Actual results:

Only the initial key is present on the node:

$ oc debug node/$(oc get nodes -l node-role.kubernetes.io/worker -ojsonpath="{.items[0].metadata.name}") -- chroot /host cat /home/core/.ssh/authorized_keys.d/ignition

Warning: metadata.name: this is used in the Pod's hostname, which can result in surprising behavior; a DNS label is recommended: [must be no more than 63 characters]

Starting pod/sregidor-sr3-bfxxj-worker-a-h5b5jcopenshift-qeinternal-debug-ljxgx ...

To use host binaries, run `chroot /host`

ssh-rsa AAAA.........qe@redhat.com

Removing debug pod ...

Expected results:

The added SSH keys should also be configured in the /home/core/.ssh/authorized_keys.d/ignition file.
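To make this actual-versus-expected comparison repeatable, a small check can diff the keys listed in the rendered MachineConfig against the contents of /home/core/.ssh/authorized_keys.d/ignition. This is a hypothetical helper, not part of any OpenShift tooling; it assumes every line has the plain `type base64 comment` form with no option prefixes, and compares keys by type and blob so that differing comments do not matter.

```python
def key_identity(line: str) -> tuple[str, str]:
    """Return (key type, base64 blob) for an OpenSSH public key line, ignoring the comment."""
    fields = line.split()
    return fields[0], fields[1]


def missing_keys(rendered_keys: list[str], authorized_keys_text: str) -> list[str]:
    """Return the keys from the rendered MC that are absent from the node's key file."""
    on_node = {
        key_identity(line)
        for line in authorized_keys_text.splitlines()
        if line.strip() and not line.lstrip().startswith("#")
    }
    return [key for key in rendered_keys if key_identity(key) not in on_node]


if __name__ == "__main__":
    # Placeholder keys; real keys are much longer.
    rendered = [
        "ssh-rsa AAAAkey1 qe@redhat.com",
        "ssh-rsa AAAAkey2 mco_test@redhat.com",
        "ssh-rsa AAAAkey3 mco_test2@redhat.com",
    ]
    node_file = "ssh-rsa AAAAkey1 qe@redhat.com\n"
    # Reports the two mco_test keys, which is the symptom described above.
    print(missing_keys(rendered, node_file))
```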

Additional info:

Description of problem:

In pools with On-Cluster Build enabled, when config drift occurs because a file's content has been changed manually, the MCP becomes degraded (this is expected):

- lastTransitionTime: "2023-08-31T11:34:33Z"
  message: 'Node sregidor-sr2-2gb5z-worker-a-7tpjd.c.openshift-qe.internal is reporting:
    "unexpected on-disk state validating against quay.io/xxx/xxx@sha256:........................:
    content mismatch for file \"/etc/mco-test-file\""'
  reason: 1 nodes are reporting degraded status on sync
  status: "True"
  type: NodeDegraded

If we fix the drift by restoring the file's original content, the MCP remains degraded, now with this message:

- lastTransitionTime: "2023-08-31T12:24:47Z"
  message: 'Node sregidor-sr2-2gb5z-worker-a-q7wcb.c.openshift-qe.internal is
    reporting: "failed to update OS to quay.io/xxx/xxx@sha256:.......
    : error running rpm-ostree rebase --experimental ostree-unverified-registry:quay.io/xxx/xxx@sha256:........:
    error: Old and new refs are equal: ostree-unverified-registry:quay.io/xxx/xxx@sha256:..............\n:
    exit status 1"'
  reason: 1 nodes are reporting degraded status on sync
  status: "True"
  type: NodeDegraded

Version-Release number of selected component (if applicable):

$ oc get clusterversion
NAME      VERSION                              AVAILABLE   PROGRESSING   SINCE   STATUS
version   4.14.0-0.nightly-2023-08-30-191617   True        False         4h18m   Error while reconciling 4.14.0-0.nightly-2023-08-30-191617: the cluster operator monitoring is not available

How reproducible:

Always

Steps to Reproduce:

1. Enable the OCB functionality for the worker pool:

$ oc label mcp/worker machineconfiguration.openshift.io/layering-enabled=

(Create the ConfigMaps and Secrets that the OCB functionality needs.)

Wait until the new image is created and the nodes are updated.

2. Create an MC to deploy a new file:

apiVersion: machineconfiguration.openshift.io/v1
kind: MachineConfig
metadata:
  labels:
    machineconfiguration.openshift.io/role: worker
  name: mco-drift-test-file
spec:
  config:
    ignition:
      version: 3.2.0
    storage:
      files:
      - contents:
          source: data:,MCO%20test%20file%0A
        path: /etc/mco-test-file

Wait until the new MC is deployed.

3. Make a backup of /etc/mco-test-file, then modify its content:

$ oc debug node/$(oc get nodes -l node-role.kubernetes.io/worker -ojsonpath="{.items[0].metadata.name}")

Warning: metadata.name: this is used in the Pod's hostname, which can result in surprising behavior; a DNS label is recommended: [must be no more than 63 characters]

Starting pod/sregidor-sr2-2gb5z-worker-a-q7wcbcopenshift-qeinternal-debug-sv85v ...

To use host binaries, run `chroot /host`

Pod IP: 10.0.128.9

If you don't see a command prompt, try pressing enter.

sh-4.4# chroot /host

sh-5.1# cd /etc

sh-5.1# cat mco-test-file

MCO test file

sh-5.1# cp mco-test-file mco-test-file-back

sh-5.1# echo -n "1" >> mco-test-file

4. Wait until the MCP reports the config drift:

$ oc get mcp worker -o yaml
....
- lastTransitionTime: "2023-08-31T11:34:33Z"
  message: 'Node sregidor-sr2-2gb5z-worker-a-7tpjd.c.openshift-qe.internal is reporting:
    "unexpected on-disk state validating against quay.io/xxx/xxx@sha256:........................:
    content mismatch for file \"/etc/mco-test-file\""'
  reason: 1 nodes are reporting degraded status on sync
  status: "True"
  type: NodeDegraded

5. Restore the backup that we made in step 3

sh-5.1# cp mco-test-file-back mco-test-file
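The file contents in step 2 are given as an RFC 2397 data URL, so the drift in steps 3 to 5 can be reasoned about byte by byte. The following sketch, whose helper names are hypothetical and which is a simplification rather than the MCO's actual validation code, decodes the source and compares it with the on-disk bytes: appending "1" produces a mismatch, and restoring the backup makes the content match again, which is why the pool is expected to recover.

```python
from urllib.parse import unquote_to_bytes


def decode_data_url(source: str) -> bytes:
    """Decode a non-base64 data URL such as the one in the MachineConfig above."""
    header, sep, payload = source.partition(",")
    if not header.startswith("data:") or not sep:
        raise ValueError(f"not a data URL: {source!r}")
    if header.endswith(";base64"):
        raise NotImplementedError("base64 data URLs are out of scope for this sketch")
    # Non-base64 payloads are percent-encoded bytes.
    return unquote_to_bytes(payload)


def content_matches(expected_source: str, on_disk: bytes) -> bool:
    return decode_data_url(expected_source) == on_disk


if __name__ == "__main__":
    source = "data:,MCO%20test%20file%0A"
    original = decode_data_url(source)       # b"MCO test file\n"
    drifted = original + b"1"                # effect of: echo -n "1" >> mco-test-file
    print(content_matches(source, original)) # True: matches after the backup is restored
    print(content_matches(source, drifted))  # False: "content mismatch"
```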

Actual results:

The worker pool is degraded with this message:

- lastTransitionTime: "2023-08-31T12:24:47Z"
  message: 'Node sregidor-sr2-2gb5z-worker-a-q7wcb.c.openshift-qe.internal is
    reporting: "failed to update OS to quay.io/xxx/xxx@sha256:.......
    : error running rpm-ostree rebase --experimental ostree-unverified-registry:quay.io/xxx/xxx@sha256:........:
    error: Old and new refs are equal: ostree-unverified-registry:quay.io/xxx/xxx@sha256:..............\n:
    exit status 1"'
  reason: 1 nodes are reporting degraded status on sync
  status: "True"
  type: NodeDegraded

Expected results:

The node pool should stop being degraded.
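The error shows that, after the drift was fixed, the daemon re-ran `rpm-ostree rebase` toward the image the node was already running. One way to express the expected behavior is to skip the rebase when the booted and target images are identical. This is a hypothetical sketch of such a guard, not the MCO's implementation; the image pullspec is a placeholder, and how the booted image is discovered (for example from `rpm-ostree status`) is left out.

```python
def plan_os_update(booted_image: str, target_image: str) -> list[str]:
    """Return the rebase command to run, or [] if the node already runs target_image."""
    if booted_image == target_image:
        # Rebasing onto the image that is already booted fails with
        # "Old and new refs are equal", so there is nothing to do.
        return []
    return [
        "rpm-ostree", "rebase", "--experimental",
        f"ostree-unverified-registry:{target_image}",
    ]


if __name__ == "__main__":
    image = "quay.io/example/os-image@sha256:0123abcd"  # placeholder pullspec
    print(plan_os_update(image, image))  # [] -> no rebase attempted
```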

Additional info:

There is a link to the must-gather file in the first comment of this issue.

Description of problem:

In OCB pools, when we create an MC to configure a password for the "core" user, the password is not configured.

Version-Release number of selected component (if applicable):

$ oc get clusterversion
NAME      VERSION                              AVAILABLE   PROGRESSING   SINCE   STATUS
version   4.14.0-0.nightly-2023-08-30-191617   True        False         5h38m   Cluster version is 4.14.0-0.nightly-2023-08-30-191617

How reproducible:

Always

Steps to Reproduce:

1. Enable on-cluster build on the "worker" pool.

2. Create an MC to configure the "core" user password:

apiVersion: machineconfiguration.openshift.io/v1
kind: MachineConfig
metadata:
  creationTimestamp: "2023-09-01T09:51:14Z"
  generation: 1
  labels:
    machineconfiguration.openshift.io/role: worker
  name: tc-59417-test-core-passwd-tx2ndvcd
  resourceVersion: "105610"
  uid: 1f7a4de1-6222-4153-a46c-d1a17e5f89b1
spec:
  config:
    ignition:
      version: 3.2.0
    passwd:
      users:
      - name: core
        passwordHash: $6$uim4LuKWqiko1l5K$QJUwg.4lAyU4egsM7FNaNlSbuI6JfQCRufb99QuF082BpbqFoHP3WsWdZ5jCypS0veXWN1HDqO.bxUpE9aWYI1 # password coretest

3. Wait for the configuration to be built and applied.

Actual results:

The password is not configured for the core user.

On a worker node, we can't log in using the new password:

$ oc debug node/sregidor-sr3-bfxxj-worker-a-h5b5j.c.openshift-qe.internal
Warning: metadata.name: this is used in the Pod's hostname, which can result in surprising behavior; a DNS label is recommended: [must be no more than 63 characters]
Starting pod/sregidor-sr3-bfxxj-worker-a-h5b5jcopenshift-qeinternal-debug-cb2gh ...
To use host binaries, run `chroot /host`
Pod IP: 10.0.128.2
If you don't see a command prompt, try pressing enter.
sh-4.4# chroot /host
sh-5.1# su core
[core@sregidor-sr3-bfxxj-worker-a-h5b5j /]$ su core
Password:
su: Authentication failure

The password is not configured:

sh-5.1# cat /etc/shadow |grep core
systemd-coredump:!!:::::::
core:*:19597:0:99999:7:::

Expected results:

The password should be configured, and we should be able to log in to the nodes as the "core" user with the configured password.
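The `grep core` above also matches `systemd-coredump`, and the `*` in the core user's password field means no password hash was written. A slightly more precise check parses the shadow entry for the exact user and reports whether the `$6$` (SHA-512 crypt) hash from the MachineConfig landed. The helper below is a hypothetical verification aid, not OpenShift tooling.

```python
def shadow_password_state(shadow_text: str, user: str) -> str:
    """Classify the password field of `user` in /etc/shadow-formatted text."""
    for line in shadow_text.splitlines():
        fields = line.split(":")
        if fields[0] != user:  # exact match, unlike `grep core`
            continue
        password = fields[1] if len(fields) > 1 else ""
        if password == "":
            return "empty"
        if password.startswith(("*", "!")):
            # "*" or a leading "!" means password login is not possible.
            return "no usable password"
        if password.startswith("$6$"):
            return "sha512-crypt hash"
        return "other hash"
    raise KeyError(f"user {user!r} not found")


if __name__ == "__main__":
    observed = "systemd-coredump:!!:::::::\ncore:*:19597:0:99999:7:::"
    print(shadow_password_state(observed, "core"))  # no usable password
```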

Additional info:

Note: phase 2 target is tech preview.

Feature Overview

In the initial delivery of CoreOS Layering, administrators are required to provide their own build environment to customize RHCOS images. That could be a traditional RHEL environment, or an enterprising administrator with some knowledge of OCP Builds could potentially set one up on-cluster.

The primary virtue of an on-cluster build path is to continue using the cluster to manage the cluster. No external dependency, batteries-included.

On-cluster, automated RHCOS Layering builds are important for multiple reasons:

- One-click/one-command upgrades of OCP are very popular. Many customers may want to make one or just a few customizations but also want to keep that simplified upgrade experience.

- Customers who only need to customize RHCOS temporarily (hotfix, driver test package, etc) will find off-cluster builds to be too much friction for one driver.

- One of OCP's virtues is that the platform and OS are developed, tested, and versioned together. Off-cluster building breaks that connection and leaves it up to the user to keep the OS up-to-date with the platform containers. We must make it easy for customers to add what they need and keep the OS image matched to the platform containers.

Goals & Requirements

- The goal of this feature is primarily to bring the 4.14 progress (OCPSTRAT-35) to a Tech Preview or GA level of support.

- Customers should be able to specify a Containerfile with their customizations and "forget it" as long as the automated builds succeed. If they fail, the admin should be alerted and pointed to the logs from the failed build.

- The admin should then be able to correct the build and resume the upgrade.

- Intersect with the Custom Boot Images such that a required custom software component can be present on every boot of every node throughout the installation process including the bootstrap node sequence (example: out-of-box storage driver needed for root disk).

- Users can return a pool to an unmodified image easily.

- RHEL entitlements should be wired in or at least simple to set up (once).

- Parity with current features – including the current drain/reboot suppression list, CoreOS Extensions, and config drift monitoring.

The goal of this effort is to leverage OVN Kubernetes SDN to satisfy networking requirements of both traditional and modern virtualization. This Feature describes the envisioned outcome and tracks its implementation.

Current state

In its current state, OpenShift Virtualization provides a flexible toolset allowing customers to connect VMs to the physical network. It also has limited secondary overlay network capabilities and Pod network support.

It suffers from several gaps. The topology of the default pod network is not suitable for typical VM workloads; as a result, we are missing out on many of the advanced capabilities of OpenShift networking, and we also don't have a good solution for public cloud. Another problem is that while we provide plenty of tools to build a network solution, we are not very good at guiding cluster administrators through configuring their network, which leaves them relying on their account team.

Desired outcome

Provide:

- Networking solution for public cloud

- Advanced SDN networking functionality such as IPAM, routed ingress, DNS and cloud-native integration

- Ability to host traditional VM workload imported from other virtualization platforms

... while maintaining networking expectations of a typical VM workload:

- Sticky IPs allowing seamless live migration

- External IP reflected inside the guest, i.e. no NAT for east-west traffic

Additionally, make our networking configuration more accessible to newcomers by providing a finite list of user stories mapped to recommended solutions.

User stories

You can find more info about this effort in https://docs.google.com/document/d/1jNr0E0YMIHsHu-aJ4uB2YjNY00L9TpzZJNWf3LxRsKY/edit

Goal

Allow administrators to create new Network Attachment Definitions for OVN Kubernetes secondary localnet networks.

Non-Requirements

- <List of things not included in this epic, to alleviate any doubt raised during the grooming process.>

Notes

- This should be a new item in the dropdown menu of the NetworkAttachmentDefinition dialog. When "OVN Kubernetes secondary localnet network" is selected, three additional fields should show up: "Bridge mapping", "MTU (optional)" and "VLAN (optional)". The "Bridge mapping" attribute should have a tooltip explaining what it is. That should render into:

apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: <name>
  namespace: <namespace>
spec:
  config: |2
    {
      "cniVersion": "0.4.0",
      "name": "<bridge mapping>",
      "type": "ovn-k8s-cni-overlay",
      "topology":"localnet",
      "vlanID": <VLAN>,
      "mtu": <MTU>,
      "netAttachDefName": "<namespace>/<name>"
    }

The "vlanID" and "mtu" keys are set only if the user provides a value for them.

- Tooltip text: "Reference to a physical network name. A bridge mapping must be configured on cluster nodes to map between physical network names and Open vSwitch bridges."

- Draft of the localnet documentation https://github.com/openshift/openshift-docs/blob/0fe4ec0bcc31afd4ba5060fb87dc9c347da34603/modules/configuring-localnet-switched-topology.adoc#L92
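The rendering rule in the notes above (always emit the fixed keys, add `vlanID` and `mtu` only when the user supplies them) can be sketched as a small function. This Python function is an illustration only, not the console's code, and the example values (`vm-net`, `my-ns`, `physnet1`, VLAN 100) are hypothetical.

```python
import json
from typing import Optional


def localnet_nad(name: str, namespace: str, bridge_mapping: str,
                 vlan: Optional[int] = None, mtu: Optional[int] = None) -> dict:
    """Build the localnet NetworkAttachmentDefinition described above as a dict."""
    cni_config = {
        "cniVersion": "0.4.0",
        "name": bridge_mapping,
        "type": "ovn-k8s-cni-overlay",
        "topology": "localnet",
        "netAttachDefName": f"{namespace}/{name}",
    }
    # Optional fields are emitted only when the user filled them in.
    if vlan is not None:
        cni_config["vlanID"] = vlan
    if mtu is not None:
        cni_config["mtu"] = mtu
    return {
        "apiVersion": "k8s.cni.cncf.io/v1",
        "kind": "NetworkAttachmentDefinition",
        "metadata": {"name": name, "namespace": namespace},
        # spec.config holds the CNI configuration as a JSON string, not a nested object.
        "spec": {"config": json.dumps(cni_config, indent=2)},
    }


if __name__ == "__main__":
    print(json.dumps(localnet_nad("vm-net", "my-ns", "physnet1", vlan=100), indent=2))
```

Key order inside the JSON string differs from the template above, which does not matter to a JSON parser.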

Done Checklist

- DEV - Upstream code and tests merged: https://github.com/openshift/console/pull/13293

- QE - Test plan: https://polarion.engineering.redhat.com/polarion/#/project/CNV/workitem?id=CNV-10548

- QE - Test automation: https://gitlab.cee.redhat.com/cnv-qe/kubevirt-ui/-/merge_requests/539

- Feature complete including (next line)

- OVN-K

Through discussion in https://issues.redhat.com/browse/OCPBUGS-13966, we have decided that port 80 can't be supported in conjunction with port 443 at this time for the default route ingress.

This needs to be documented for 4.14

Feature Overview (aka. Goal Summary)

As a result of Hashicorp's license change to BSL, Red Hat OpenShift needs to remove the use of Hashicorp's Terraform from the installer – specifically for OpenStack deployments which currently use Terraform for setting up the infrastructure.

To avoid an increased support overhead once the license changes at the end of the year, we want to provision OpenStack infrastructure without the use of Terraform.

Requirements (aka. Acceptance Criteria):

- The OpenStack Installer no longer contains or uses Terraform.

- The new provider should aim to provide the same results and have parity with the existing OpenStack Terraform provider. Specifically, we should aim for feature parity against the install config and the cluster it creates to minimize impact on existing customers' UX.

Use Cases (Optional):

Include use case diagrams, main success scenarios, alternative flow scenarios. Initial completion during Refinement status.

Questions to Answer (Optional):

Include a list of refinement / architectural questions that may need to be answered before coding can begin. Initial completion during Refinement status.

Out of Scope

High-level list of items that are out of scope. Initial completion during Refinement status.

Background

Provide any additional context needed to frame the feature. Initial completion during Refinement status.

Customer Considerations

Provide any additional customer-specific considerations that must be made when designing and delivering the Feature. Initial completion during Refinement status.

Documentation Considerations

Provide information that needs to be considered and planned so that documentation will meet customer needs. If the feature extends existing functionality, provide a link to its current documentation. Initial completion during Refinement status.

Interoperability Considerations

Which other projects, including ROSA/OSD/ARO, and versions in our portfolio does this feature impact? What interoperability test scenarios should be factored by the layered products? Initial completion during Refinement status.

Goal

Move CAPO (cluster-api-provider-openstack) to a stable API.

Why is this important?

Currently OpenShift on OpenStack is using MAPO. This uses objects from the upstream CAPO project under the hood but not the APIs. We would like to start using CAPO and declare MAPO as deprecated and frozen, but before we do that upstream CAPO's own API needs to be declared stable.

Upstream CAPO's API is currently at v1alpha6. There are a number of incompatible changes already planned for the API which have prevented us from declaring it v1beta1. We should make those changes and move towards a stable API.

The changes need to be accompanied by an improvement in test coverage of API versions.

Upstream issues targeted for v1beta1 should be tracked in the v0.7 milestone: https://github.com/kubernetes-sigs/cluster-api-provider-openstack/issues?q=is%3Aopen+is%3Aissue+milestone%3Av0.7

Another option is to switch to cluster-capi-operator if it graduates, which would mean only a single API would be maintained.

Scenarios

N/A. This is purely upstream work for now. We will directly benefit from this work once we switch to CAPO in a future release.

Acceptance Criteria

Upstream CAPO provides a v1beta1 API

Upstream CAPO includes e2e tests using envtest (https://book.kubebuilder.io/reference/envtest.html) which will allow us to avoid breaks in API compatibility

Dependencies (internal and external)

None.

Previous Work (Optional):

N/A

To move forward, we need to bump CAPO in MAPO from v1alpha6 to v1alpha7.

A customer has escalated issues where ports don't have TLS support. This feature request lists all the component ports raised by the customer.

Details here https://docs.google.com/document/d/1zB9vUGB83xlQnoM-ToLUEBtEGszQrC7u-hmhCnrhuXM/edit

Currently, we serve metrics over plain HTTP on port 9537; we need to upgrade this to use TLS.

Related to https://docs.google.com/document/d/1zB9vUGB83xlQnoM-ToLUEBtEGszQrC7u-hmhCnrhuXM/edit

Feature Overview (aka. Goal Summary)

As a result of Hashicorp's license change to BSL, Red Hat OpenShift needs to remove the use of Hashicorp's Terraform from the installer – specifically for IPI deployments which currently use Terraform for setting up the infrastructure.

To avoid an increased support overhead once the license changes at the end of the year, we want to provision OpenShift on the existing supported providers' infrastructure without the use of Terraform.

This feature will be used to track all the CAPI preparation work that is common for all the supported providers

Use Cases (Optional):

Include use case diagrams, main success scenarios, alternative flow scenarios. Initial completion during Refinement status.

Questions to Answer (Optional):

Include a list of refinement / architectural questions that may need to be answered before coding can begin. Initial completion during Refinement status.

Out of Scope

High-level list of items that are out of scope. Initial completion during Refinement status.

Background

Provide any additional context needed to frame the feature. Initial completion during Refinement status.

Customer Considerations

Provide any additional customer-specific considerations that must be made when designing and delivering the Feature. Initial completion during Refinement status.

Documentation Considerations

Provide information that needs to be considered and planned so that documentation will meet customer needs. If the feature extends existing functionality, provide a link to its current documentation. Initial completion during Refinement status.

Interoperability Considerations

Which other projects, including ROSA/OSD/ARO, and versions in our portfolio does this feature impact? What interoperability test scenarios should be factored by the layered products? Initial completion during Refinement status.

Epic Goal

- Day 0 Cluster Provisioning

- Compatibility with existing workflows that do not require a container runtime on the host

Why is this important?

- This epic would maintain compatibility with existing customer workflows that do not have access to a management cluster and do not have the dependency of a container runtime

Scenarios

- openshift-install running in customer automation

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

- ...

Dependencies (internal and external)

- ...

Previous Work (Optional):

- …

Open questions:

- …

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

PoC & design for running CAPI control plane using binaries.

As a customer, I would like to deploy OpenShift on OpenStack using the IPI workflow, where my control plane would have 3 machines and each machine would use a root volume (a Cinder volume attached to the Nova server) and also an attached ephemeral disk using local storage that would only be used by etcd.

As this feature will be TechPreview in 4.15, this will only be implemented as a day 2 operation for now. This might or might not change in the future.

We know that etcd requires storage with strong performance capabilities, and currently a root volume backed by Ceph struggles to provide them.

Also attaching local storage to the machine and mounting it for etcd would solve the performance issues that we saw when customers were using Ceph as the backend for the control plane disks.

Gophercloud already supports creating a server with multiple ephemeral disks:

We need to figure out how we want to address that in CAPO, probably involving a new API that would later be used in OpenShift (MAPO, and probably the installer).

We'll also have to update the OpenStack Failure Domain in CPMS.
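At the Nova API level, the layout described above (a Cinder root volume plus a local ephemeral disk for etcd) corresponds to a `block_device_mapping_v2` list in the server-create request, using the `boot_index`, `source_type`, `destination_type` and `volume_size` fields. The sketch below only builds that list as plain data; the function name, image ID, and sizes are hypothetical, it assumes the flavor's ephemeral allowance covers the requested etcd disk, and the eventual CAPO/MAPO API may look quite different.

```python
import json


def control_plane_block_devices(image_id: str, root_gib: int, etcd_gib: int) -> list[dict]:
    """Hypothetical block_device_mapping_v2 entries: Cinder root volume + local etcd disk."""
    return [
        {
            # Boot disk: a Cinder volume created from the RHCOS image.
            "boot_index": 0,
            "uuid": image_id,
            "source_type": "image",
            "destination_type": "volume",
            "volume_size": root_gib,
            "delete_on_termination": True,
        },
        {
            # Second disk: blank ephemeral storage on the compute host, meant for etcd.
            "boot_index": -1,
            "source_type": "blank",
            "destination_type": "local",
            "volume_size": etcd_gib,
            "delete_on_termination": True,
        },
    ]


if __name__ == "__main__":
    # Placeholder image ID and sizes (GiB).
    body = {"block_device_mapping_v2": control_plane_block_devices("<rhcos-image-id>", 100, 10)}
    print(json.dumps(body, indent=2))
```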

ARO (Azure) has conducted some benchmarks and now recommends putting etcd on a separate data disk:

https://docs.google.com/document/d/1O_k6_CUyiGAB_30LuJFI6Hl93oEoKQ07q1Y7N2cBJHE/edit

Also, an interesting thread: https://groups.google.com/u/0/a/redhat.com/g/aos-devel/c/CztJzGWdsSM/m/jsPKZHSRAwAJ

Once we have defined an API for data volumes, we'll need to add support for this new API in MAPO so the user can update their Machines on day 2 to be redeployed with etcd on local disk.

- Day 2 install is documented here (this document was originally created for QE, as a FID).

- We need to document that when using rootVolumes for the Control Plane, etcd should be placed on a local ephemeral disk, and document how to do it.

- We also need to update https://docs.openshift.com/container-platform/4.13/scalability_and_performance/recommended-performance-scale-practices/recommended-etcd-practices.html#move-etcd-different-disk_recommended-etcd-practices with 2 adjustments: the command that is used is mkfs.xfs -f and also we use /dev/vdb.

We only allow usage of controlPlanePort as a TechPreview feature. We should move it to GA.

Open questions:

- Does this imply the removal of support for machinesSubnet from the CI jobs and documentation?

Goal

- The goal is to remove Kuryr from the payload and as an SDN option.

Why is this important?

- Kuryr is deprecated and we have a migration path; dropping it frees up a lot of resources.

Acceptance Criteria

- CI removed in 4.15.

- Images no longer part of the payload.

- Installer not accepting Kuryr as SDN option.

- Docs and release notes updated.

Since Kuryr has been removed, we no longer need to generate trunk names. The code that generates them can be removed.

Kuryr is no longer supported in 4.15 and there cannot be a 4.15 cluster with Kuryr, either a new one or upgraded. Therefore we want to remove Kuryr from must-gather.

The installer should no longer accept Kuryr as a NetworkType. If a user chooses it, the installer should show a clear error stating that Kuryr is no longer supported.
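The validation rule above can be sketched as follows. The installer is written in Go, so this Python function is illustrative only; the set of accepted network types and the error wording are assumptions, not the installer's actual list or messages.

```python
SUPPORTED_NETWORK_TYPES = {"OVNKubernetes", "OpenShiftSDN"}  # assumption, for illustration


def validate_network_type(network_type: str) -> None:
    """Raise ValueError for unsupported network types, with a dedicated Kuryr message."""
    if network_type.lower() == "kuryr":
        # Hypothetical wording: explain why it is rejected, not just that it is.
        raise ValueError(
            f"networkType {network_type!r} is not supported: Kuryr is no longer "
            "supported as of 4.15; use OVNKubernetes instead"
        )
    if network_type not in SUPPORTED_NETWORK_TYPES:
        raise ValueError(f"networkType {network_type!r} is not supported")


if __name__ == "__main__":
    for candidate in ("OVNKubernetes", "Kuryr"):
        try:
            validate_network_type(candidate)
            print(f"{candidate}: accepted")
        except ValueError as err:
            print(f"{candidate}: rejected ({err})")
```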

Feature Overview (aka. Goal Summary)

As a cluster admin, I want to see the status of an update and the update progress of each component.

Background

A common update improvement requested in customer interactions about the update experience is a status command:

- oc update status ?

From the UI, we can see the progress of the update. From the oc CLI, we can see it with "oc get cvo".

But the ask is to show more details in a human-readable format.

Know where the update has stopped. Consider adding at what run level it has stopped.

oc get clusterversion
NAME      VERSION   AVAILABLE   PROGRESSING   SINCE   STATUS
version   4.12.0    True        True          16s     Working towards 4.12.4: 9 of 829 done (1% complete)

Documentation Considerations

Update docs for UX and CLI changes

Epic Goal*

Add a new `oc adm upgrade status` command that is backed by an API. A mock of the command output is attached to this card.

Why is this important? (mandatory)

- From the UI, we can see the progress of the update. Using the oc CLI, we can see some of the information with "oc get clusterversion", but the output is not readable and contains a lot of extra information to process.

- Customers are asking us to show more details in a human-readable format, as well as to provide an API that they can use for automation.

Scenarios (mandatory)

Provide details for user scenarios including actions to be performed, platform specifications, and user personas.

Dependencies (internal and external) (mandatory)

What items must be delivered by other teams/groups to enable delivery of this epic.

Contributing Teams (and contacts) (mandatory)

Our expectation is that teams would modify the list below to fit the epic. Some epics may not need all the default groups but what is included here should accurately reflect who will be involved in delivering the epic.

- Development -

- Documentation -

- QE -

- PX -

- Others -

Acceptance Criteria (optional)

Provide some (testable) examples of how we will know if we have achieved the epic goal.

Drawbacks or Risk (optional)

Reasons we should consider NOT doing this such as: limited audience for the feature, feature will be superseded by other work that is planned, resulting feature will introduce substantial administrative complexity or user confusion, etc.

Done - Checklist (mandatory)

The following points apply to all epics and are what the OpenShift team believes are the minimum set of criteria that epics should meet for us to consider them potentially shippable. We request that epic owners modify this list to reflect the work to be completed in order to produce something that is potentially shippable.

- CI Testing - Tests are merged and completing successfully

- Documentation - Content development is complete.

- QE - Test scenarios are written and executed successfully.

- Technical Enablement - Slides are complete (if requested by PLM)

- Other

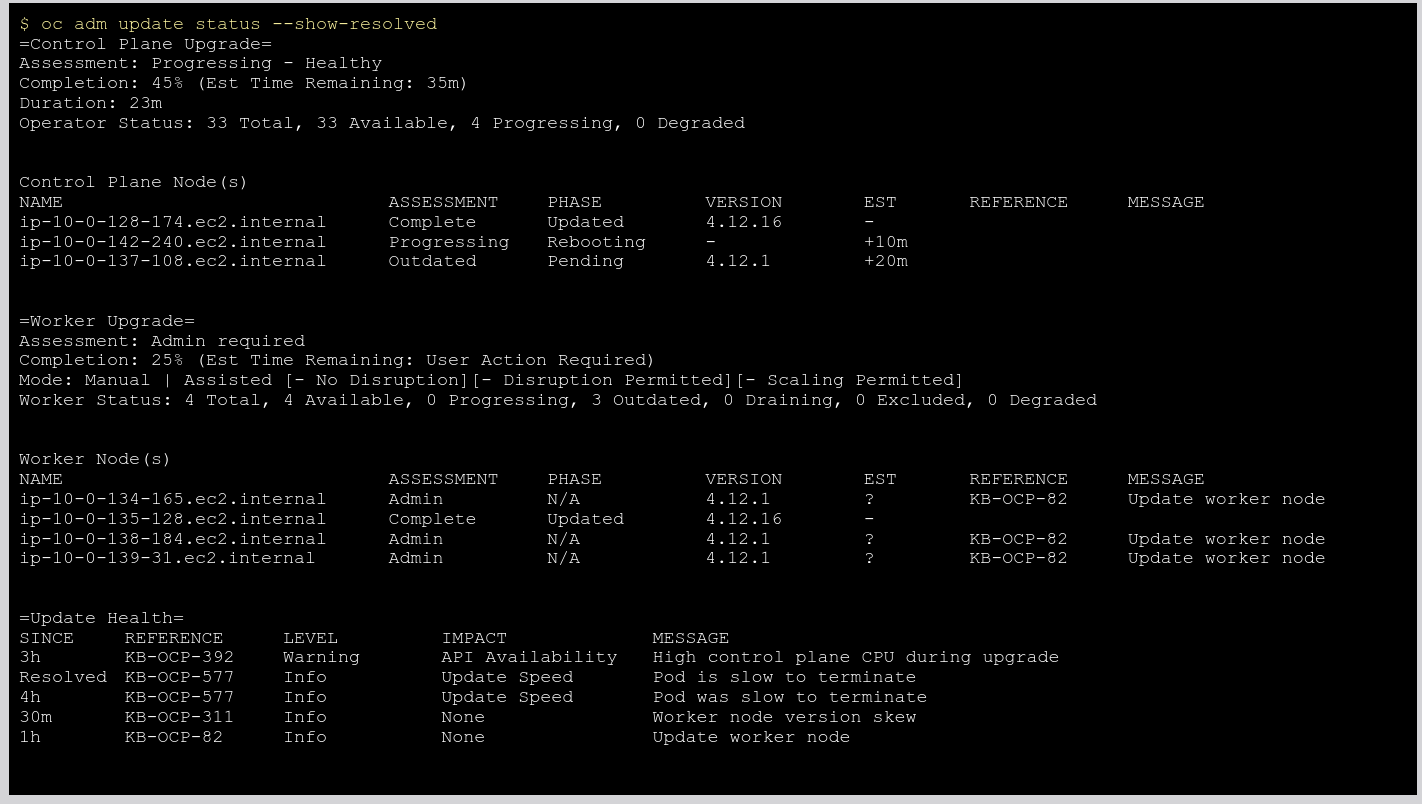

Add control plane update status to "oc adm upgrade status" command output.

Note: In the future we want to add "Est Time Remaining: 35m" to this output, but that needs a separate card.

Sample output:

= Control Plane =
Assessment:      Progressing - Healthy
Completion:      45%
Duration:        23m
Operator Status: 33 Total, 33 Available, 4 Progressing, 0 Degraded

Todo:

- Add code to get similar output

- If this needs a significant code change, please create new Jira cards.
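A possible starting point for the Todo above is a pure formatting function that takes already-computed numbers and prints the control plane section of the sample output. The dataclass, its field names, and the round-down percentage (which reproduces "9 of 829 done (1% complete)") are assumptions for illustration; the real command lives in `oc`, which is written in Go, and gathering the data from ClusterVersion and ClusterOperator objects is out of scope here.

```python
from dataclasses import dataclass


@dataclass
class ControlPlaneStatus:
    """Hypothetical pre-computed inputs for the control plane section."""
    assessment: str
    completion_pct: int
    duration: str
    total: int
    available: int
    progressing: int
    degraded: int


def percent_complete(done: int, total: int) -> int:
    """Percentage of completed items, rounded down (an assumption)."""
    return done * 100 // total


def render_control_plane(status: ControlPlaneStatus) -> str:
    rows = [
        ("Assessment", status.assessment),
        ("Completion", f"{status.completion_pct}%"),
        ("Duration", status.duration),
        ("Operator Status",
         f"{status.total} Total, {status.available} Available, "
         f"{status.progressing} Progressing, {status.degraded} Degraded"),
    ]
    # Pad every "Label:" to the width of the longest one so the values line up.
    width = max(len(label) for label, _ in rows) + 1
    lines = ["= Control Plane ="]
    lines += [f"{(label + ':').ljust(width)} {value}" for label, value in rows]
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_control_plane(
        ControlPlaneStatus("Progressing - Healthy", 45, "23m", 33, 33, 4, 0)))
```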

Feature Overview:

HyperShift-provisioned clusters, regardless of the cloud provider, support the proposed integration for OLM-managed operators outlined in OCPBU-559 and OCPBU-560.

Goals

There is no degradation in the capability or coverage of OLM-managed operators that support short-lived token authentication on clusters lifecycled via HyperShift.

Requirements:

- The flows in OCPBU-559 and OCPBU-560 need to work unchanged on HyperShift-managed clusters; most likely this means that HyperShift needs to adopt the CloudCredentialOperator.

- All operators enabled as part of OCPBU-563, OCPBU-564, OCPBU-566 and OCPBU-568 need to be able to leverage short-lived authentication on HyperShift-managed clusters without being aware that they are on HyperShift-managed clusters; OCPBU-569 and OCPBU-570 should also be achievable on HyperShift-managed clusters.

Background

Currently, Hypershift lacks support for CCO.

Customer Considerations

Currently, Hypershift will be limited to deploying clusters in which the cluster core operators are leveraging short-lived token authentication exclusively.

Documentation Considerations

If we are successful, no special documentation should be needed for this.

Outcome Overview

Operators on guest clusters can take advantage of the new tokenized authentication workflow that depends on CCO.

Success Criteria

CCO is included in HyperShift and its footprint is minimal while meeting the above outcome.

Expected Results (what, how, when)

Post Completion Review – Actual Results

After completing the work (as determined by the "when" in Expected Results above), list the actual results observed / measured during Post Completion review(s).

CCO logic for managing webhooks is a) entirely separate from the core functionality of the CCO and b) requires a lot of extra RBAC. In deployment topologies like HyperShift, we don't want this additional functionality and would like to be able to cleanly turn it off and remove the excess RBAC.

This is a clone of issue CCO-388. The following is the description of the original issue:

—

Every guest cluster should have a running CCO pod with its kubeconfig attached to it.

Enhancement doc: https://github.com/openshift/enhancements/blob/master/enhancements/cloud-integration/tokenized-auth-enablement-operators-on-cloud.md

Feature Overview (aka. Goal Summary)

As cluster admin I would like to configure machinesets to allocate instances from pre-existing Capacity Reservation in Azure.

I want to create a pool of reserved resources that can be shared between clusters of different teams based on their priorities. I want this pool of resources to remain available for my company and not get allocated to another Azure customer.

Additional background on the feature for considering additional use cases

- Proposed title of this feature request

Machine API support for Azure Capacity Reservation Groups

- What is the nature and description of the request?

The customer would like to configure machinesets to allocate instances from pre-existing Capacity Reservation Groups, see Azure docs below

- Why does the customer need this? (List the business requirements here)

This would allow the customer to create a pool of reserved resources which can be shared between clusters of different priorities. Imagine a test and prod cluster where the demands of the prod cluster suddenly grow. The test cluster is scaled down freeing resources and the prod cluster is scaled up with assurances that those resources remain available, not allocated to another Azure customer.

- List any affected packages or components.

MAPI/CAPI Azure

In this use case, there is no immediate need for install-time support to designate a capacity reservation group for control plane resources; however, we should consider whether that is desirable for completeness. We should also consider whether this should be added as an attribute of the installconfig compute machine pool, or whether altering the generated MachineSet manifests is sufficient. This appears to be a relatively new Azure feature, which may or may not see wider customer demand. This customer's primary use case centers on scaling existing clusters up and down; however, others may have different uses for this feature.


User Story

As a developer I want to add support for capacity reservation groups in openshift/machine-api-provider-azure so that Azure VMs can be associated with a capacity reservation group during VM creation.

Background

CFE-1036 adds support for Capacity Reservation in upstream CAPZ (PR). The same support needs to be added downstream. Please refer to the upstream PR when adding support downstream.

Steps

- Import the latest API changes from openshift/api to get the new field "CapacityReservationGroupID" into openshift/machine-api-provider-azure.

- If a value is assigned to the field, use it to associate the VM with the capacity reservation group during VM creation.
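The second step can be sketched as follows. This is a minimal illustration only: ProviderSpec and VMCreateParams are hypothetical stand-ins, not the real openshift/api or Azure SDK types. The point it shows is that the ID is forwarded only when set, so MachineSets that do not use the field keep creating VMs exactly as before.

```go
package main

import "fmt"

// ProviderSpec is a hypothetical, trimmed-down stand-in for the Azure machine
// provider spec; only the field relevant to this story is shown.
type ProviderSpec struct {
	VMSize                     string
	CapacityReservationGroupID string
}

// VMCreateParams is a hypothetical stand-in for the request sent to Azure
// when creating a VM.
type VMCreateParams struct {
	Size string
	// CapacityReservationGroup stays nil when no group is requested.
	CapacityReservationGroup *string
}

// buildVMParams copies the optional capacity reservation group ID into the
// create request only when the user set it.
func buildVMParams(spec ProviderSpec) VMCreateParams {
	params := VMCreateParams{Size: spec.VMSize}
	if spec.CapacityReservationGroupID != "" {
		id := spec.CapacityReservationGroupID
		params.CapacityReservationGroup = &id
	}
	return params
}

func main() {
	withGroup := buildVMParams(ProviderSpec{
		VMSize:                     "Standard_D4s_v3",
		CapacityReservationGroupID: "/subscriptions/0000/resourceGroups/rg/providers/Microsoft.Compute/capacityReservationGroups/crg",
	})
	withoutGroup := buildVMParams(ProviderSpec{VMSize: "Standard_D4s_v3"})
	fmt.Println(*withGroup.CapacityReservationGroup)
	fmt.Println(withoutGroup.CapacityReservationGroup == nil)
}
```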

Stakeholders

- Cluster Infra

- CFE

Definition of Done

- The PR should be reviewed and approved.

- Testing

- Add unit tests to validate the implementation.

As a developer I want to add the webhook validation for the "CapacityReservationGroupID" field of "AzureMachineProviderSpec" in openshift/machine-api-operator so that Azure capacity reservation can be supported.

Background

CFE-1036 adds support for Capacity Reservation in upstream CAPZ (PR). The same support needs to be added downstream. Please refer to the upstream PR when adding support downstream.

Slack discussion regarding the same: https://redhat-internal.slack.com/archives/CBZHF4DHC/p1713249202780119?thread_ts=1712582367.529309&cid=CBZHF4DHC_

Steps

- Add the validation for "CapacityReservationGroupID" to "AzureMachineProviderSpec"

- Add tests to validate.
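One possible shape for the webhook check is sketched below. It assumes the field holds a full Azure resource ID of the form /subscriptions/<sub>/resourceGroups/<rg>/providers/Microsoft.Compute/capacityReservationGroups/<name> and that an empty value is allowed because the field is optional. The function name and error wording are hypothetical; the actual machine-api-operator validation may be stricter or looser.

```go
package main

import (
	"fmt"
	"strings"
)

// fixedSegments lists the literal path segments (by index after splitting on
// "/") of a capacity reservation group resource ID.
var fixedSegments = []struct {
	index int
	want  string
}{
	{1, "subscriptions"},
	{3, "resourceGroups"},
	{5, "providers"},
	{6, "Microsoft.Compute"},
	{7, "capacityReservationGroups"},
}

// validateCapacityReservationGroupID is a hypothetical webhook check. An
// empty value is accepted because the field is optional.
func validateCapacityReservationGroupID(id string) error {
	if id == "" {
		return nil
	}
	// Splitting "/a/b" yields ["", "a", "b"], so a well-formed ID has 9 parts
	// with an empty first element.
	parts := strings.Split(id, "/")
	if len(parts) != 9 || parts[0] != "" {
		return fmt.Errorf("capacityReservationGroupID %q is not a capacity reservation group resource ID", id)
	}
	for _, s := range fixedSegments {
		// Azure resource ID segment names are compared case-insensitively.
		if !strings.EqualFold(parts[s.index], s.want) {
			return fmt.Errorf("capacityReservationGroupID %q: expected %q at segment %d", id, s.want, s.index)
		}
	}
	// Subscription, resource group and group name must be non-empty.
	for _, i := range []int{2, 4, 8} {
		if parts[i] == "" {
			return fmt.Errorf("capacityReservationGroupID %q: segment %d is empty", id, i)
		}
	}
	return nil
}

func main() {
	for _, id := range []string{
		"",
		"/subscriptions/0000/resourceGroups/rg/providers/Microsoft.Compute/capacityReservationGroups/crg",
		"crg",
	} {
		fmt.Printf("%q -> %v\n", id, validateCapacityReservationGroupID(id))
	}
}
```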

Stakeholders

- Cluster Infra

- CFE

Update the vendored dependencies in the cluster-control-plane-machine-set-operator repository to pick up the capacity reservation changes.

Feature Overview

This feature is about reducing the complexity of the CAPI install system architecture, which is needed for using the upstream Cluster API (CAPI) in place of the current Machine API implementation for standalone OpenShift.

Prerequisite Work Goals

Complete the design of the Cluster API (CAPI) architecture and build the core operator logic needed for Phase-1, incorporating the assets from different repositories to simplify asset management.

Background, and strategic fit

- Initially, CAPI did not meet OCP's requirements for cluster/machine management, but the project has moved on: CAPI is now a better fit and has better community involvement.

- CAPI has much better community interaction than MAPI.

- Other projects are considering using CAPI and it would be cleaner to have one solution

- Long term it will allow us to add new features more easily in one place vs. doing this in multiple places.

Acceptance Criteria

There must be no negative effect on customers/users of the MAPI: this API must remain accessible to them. How it is implemented "under the covers", and whether that implementation leverages CAPI, is open.

Epic Goal

- Rework the current flow for the installation of Cluster API components in OpenShift by addressing some of the criticalities of the current implementation

Why is this important?

- We need to reduce complexity of the CAPI install system architecture

- We need to improve the development, stability and maintainability of Standalone Cluster API on OpenShift

- We need to make Cluster

Acceptance Criteria

- CI - MUST be running successfully with tests automated

Dependencies (internal and external)

- ...

Previous Work (Optional):

Open questions:

- …

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

User Story

As an OpenShift engineer I want to be able to install the new manifest generation tool as a standalone tool in my CAPI Infra Provider repo to generate the CAPI Provider transport ConfigMap(s)

Background

Renaming of the CAPI Asset/Manifest generator from assets (generator) to manifest-gen, as it won't need to generate go embeddable assets anymore, but only manifests that will be referenced and applied by CVO

Steps

- Removal of the `/assets` folder - we are moving away from embedded assets in favour of transport ConfigMaps

- Renaming of the CAPI Asset/Manifest generator from assets (generator) to manifest-gen, as it won't need to generate go embeddable assets anymore, but only manifests that will be referenced and applied by CVO

- Removal of the cluster-api-operator specific code from the assets generator - we are moving away from using the cluster-api-operator

- Remove the assets generator specific references from the Makefiles/hack scripts - they won't be needed anymore as the tool will be referenced only from other repositories

- Adapting the new generator tool to be a standalone go module that can be installed as a tool in other repositories to generate manifests

- Make sure to add CRDs and Conversion, Validation (also Mutation?) Webhooks to the generated transport ConfigMaps
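Because Kubernetes rejects ConfigMaps whose data exceeds 1 MiB, a generator producing transport ConfigMaps may need to split one provider's manifests across several of them. The sketch below shows one way to do that with a greedy packing; packManifests is a hypothetical helper, not part of the manifest-gen tool, and maxBytes is assumed to be set with headroom below 1 MiB for keys, separators and metadata.

```go
package main

import (
	"fmt"
	"strings"
)

// packManifests greedily groups manifests into chunks whose combined size
// stays within maxBytes, so each chunk can become one transport ConfigMap.
func packManifests(manifests []string, maxBytes int) ([][]string, error) {
	var chunks [][]string
	var current []string
	size := 0
	for _, m := range manifests {
		if len(m) > maxBytes {
			return nil, fmt.Errorf("manifest of %d bytes exceeds limit of %d", len(m), maxBytes)
		}
		// Start a new chunk when adding this manifest would overflow the current one.
		if size+len(m) > maxBytes && len(current) > 0 {
			chunks = append(chunks, current)
			current = nil
			size = 0
		}
		current = append(current, m)
		size += len(m)
	}
	if len(current) > 0 {
		chunks = append(chunks, current)
	}
	return chunks, nil
}

func main() {
	manifests := []string{
		strings.Repeat("a", 400),
		strings.Repeat("b", 400),
		strings.Repeat("c", 400),
	}
	chunks, err := packManifests(manifests, 1000)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	for i, c := range chunks {
		fmt.Printf("transport ConfigMap %d holds %d manifest(s)\n", i, len(c))
	}
}
```

Greedy packing keeps manifests in their original order, which matters if the consumer applies them in sequence; a tighter bin-packing would save ConfigMaps at the cost of reordering.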

Stakeholders

- Cluster Infrastructure Team

- ShiftStack Team (CAPO)

Definition of Done

- Working and standalone installable generation tool

- https://github.com/openshift/cluster-api-provider-aws/pull/486

- https://github.com/openshift/cluster-api/pull/189

- https://github.com/openshift/cluster-capi-operator/pull/121

- https://github.com/openshift/cluster-api-provider-gcp/pull/214

- https://github.com/openshift/cluster-api-provider-ibmcloud/pull/68

User Story

- As an OpenShift engineer I want to reduce the complexity of the CAPI component installation process so that it is more easily developed and maintainable.

- As an OpenShift engineer I want a way to install CAPI providers depending on the platform the cluster is running on

Background

To reduce the complexity of the system, we propose removing the upstream cluster-api operator (kubernetes-sigs/cluster-api-operator). This component is currently responsible for reading, fetching and installing the desired providers in the cluster; we plan to take over these responsibilities by implementing them directly in the downstream openshift/cluster-capi-operator.

Steps

- Removal of the upstream cluster-api-operator manifest/CRs generation steps from the asset/manifest generator in (https://github.com/openshift/cluster-capi-operator/tree/main/hack/assets), as this component is removed from the system

- Removal of the Cluster Operator controller (which at the moment loads and applies cluster-api-operator CRs)

- Introduction of a new controller within the downstream cluster-capi-operator, called CAPI Installer controller, which is going to be responsible for replacing the duties previously carried out by the Cluster Operator controller + cluster-api-operator, in the following way:

- detection of the current Infrastructure platform the cluster is running on

- loading of the desired CAPI provider manifests at runtime by fetching the relevant transport ConfigMaps (the core one and the infrastructure-specific one) that contain them (there can be multiple ConfigMaps per provider; use label selectors to fetch them, see here).

- extraction of the CAPI provider manifests (CRDs, RBACs, Deployments, Webhooks, Services, ...) from the fetched transport ConfigMaps

- injection of the templated values in such manifests (e.g. ART container images references)

- order-aware direct apply of the resulting CAPI provider manifests at runtime (using library-go specific pkgs to apply them where possible)

- continuous tracking of the provider ConfigMaps and reconciliation of the applied CAPI components

- Left to do:

- Rebase changes

- Some boilerplate about creating a new controller

- Apply deployments/daemonsets in a simple way

- Apply correct environment variables to manifests
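The installer duties listed above (extract, inject templated values, apply in order) can be sketched as follows. This is a minimal illustration, not the cluster-capi-operator code: the Manifest type, the kind-based apply order and the ${CAPI_IMAGE} placeholder are assumptions, and a real controller would apply objects through library-go rather than print them.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// Manifest is a hypothetical, minimal view of an object extracted from a
// transport ConfigMap: its kind plus raw YAML.
type Manifest struct {
	Kind string
	YAML string
}

// applyOrder is an assumed ordering: CRDs and RBAC first so later objects can
// rely on them, workloads next, webhook configurations last so they are only
// registered once their backing Service and Deployment exist.
var applyOrder = map[string]int{
	"CustomResourceDefinition":       0,
	"Namespace":                      1,
	"ServiceAccount":                 2,
	"ClusterRole":                    3,
	"ClusterRoleBinding":             3,
	"Role":                           3,
	"RoleBinding":                    3,
	"Service":                        4,
	"Deployment":                     5,
	"DaemonSet":                      5,
	"ValidatingWebhookConfiguration": 6,
	"MutatingWebhookConfiguration":   6,
}

// rank returns the apply priority for a kind; unknown kinds are applied with
// the workloads, before the webhooks.
func rank(kind string) int {
	if r, ok := applyOrder[kind]; ok {
		return r
	}
	return 5
}

// prepare substitutes templated values (e.g. image references) and returns
// the manifests sorted into apply order. Ties keep their original order.
// Placeholders are assumed not to overlap, since map iteration order is random.
func prepare(manifests []Manifest, values map[string]string) []Manifest {
	out := make([]Manifest, len(manifests))
	for i, m := range manifests {
		y := m.YAML
		for placeholder, value := range values {
			y = strings.ReplaceAll(y, placeholder, value)
		}
		out[i] = Manifest{Kind: m.Kind, YAML: y}
	}
	sort.SliceStable(out, func(i, j int) bool {
		return rank(out[i].Kind) < rank(out[j].Kind)
	})
	return out
}

func main() {
	in := []Manifest{
		{Kind: "ValidatingWebhookConfiguration", YAML: "webhook"},
		{Kind: "Deployment", YAML: "image: ${CAPI_IMAGE}"},
		{Kind: "CustomResourceDefinition", YAML: "crd"},
	}
	values := map[string]string{"${CAPI_IMAGE}": "registry.example.com/capi:latest"}
	for _, m := range prepare(in, values) {
		fmt.Println(m.Kind, "->", m.YAML)
	}
}
```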

Stakeholders

- Cluster Infrastructure team

- ShiftStack team

- Hypershift team

Definition of Done

- Current CAPI E2E tests should still pass after this refactor

- Improved Docs

- Improved Testing

User Story

As an OpenShift engineer I want the CAPI Providers repositories to use the new generator tool so that they can independently generate CAPI Provider transport ConfigMaps

Background

Once the new CAPI manifests generator tool is ready, we want to use it directly from the CAPI Providers repositories, so we can avoid storing the generated configuration centrally and instead apply it independently based on the platform the cluster is running on.

Steps

- Install new CAPI manifest generator as a go `tool` to all the CAPI provider repositories

- Set up a make target under `/openshift/Makefile` to invoke the generator. Make it output the manifests under `/openshift/manifests`

- Make sure `/openshift/manifests` is mapped to `/manifests` in the openshift/Dockerfile, so that the files are later picked up by CVO

- Make sure the manifest generation works by triggering a manual generation

- Check in the newly generated transport ConfigMap + Credential Requests (to let them be applied by CVO)

Stakeholders

- <Who is interested in this/where did they request this>

Definition of Done

- CAPI manifest generator tool is installed

- Docs

- <Add docs requirements for this card>

- Testing

- <Explain testing that will be added>

- https://github.com/openshift/cluster-api-provider-aws/pull/471

- https://github.com/openshift/cluster-api/pull/179

- https://github.com/openshift/cluster-api-provider-gcp/pull/209

- https://github.com/openshift/cluster-api-provider-ibmcloud/pull/64

- https://github.com/openshift/cluster-api-provider-vsphere/pull/27

Epic Goal

Provide a long term solution to SELinux context labeling in OCP.

Why is this important? (mandatory)

Today, when SELinux is enabled, a PV's files are relabeled when the PV is attached to a pod. This can cause timeouts when a PV contains many files, and can also overload the storage backend.

https://access.redhat.com/solutions/6221251 provides a few workarounds until the proper fix is implemented. Unfortunately, these workarounds are not perfect, and we need a seamless, optimized long-term solution.

This feature tracks the long-term solution, in which the PV filesystem is mounted with the correct SELinux context, avoiding the need to relabel every file.

Scenarios (mandatory)

Provide details for user scenarios including actions to be performed, platform specifications, and user personas.

- Apply new context when there is none

- Change context of all files/folders when changing context

- RWO & RWX PVs

- ReadWriteOncePod PVs first

- RWX PV in a second phase

Because we rely on the mount context, there should not be any relabeling (chcon): all files/folders inherit their context from the mount context.

More on design & scenarios in the KEP and related epic STOR-1173

Dependencies (internal and external) (mandatory)

None for the core feature

However, the driver has to set seLinuxMount to true in its CSIDriverSpec to enable this feature.

Contributing Teams (and contacts) (mandatory)

Our expectation is that teams would modify the list below to fit the epic. Some epics may not need all the default groups but what is included here should accurately reflect who will be involved in delivering the epic.

- Development - STOR

- Documentation - STOR

- QE - STOR

- PX -

- Others -

Done - Checklist (mandatory)

The following points apply to all epics and are what the OpenShift team believes are the minimum set of criteria that epics should meet for us to consider them potentially shippable. We request that epic owners modify this list to reflect the work to be completed in order to produce something that is potentially shippable.

- CI Testing - Basic e2e automation tests are merged and completing successfully

- Documentation - Content development is complete.

- QE - Test scenarios are written and executed successfully.

- Technical Enablement - Slides are complete (if requested by PLM)

- Engineering Stories Merged

- All associated work items with the Epic are closed

- Epic status should be “Release Pending”

Epic Goal

Support the upstream feature "SELinux relabeling using mount options (CSIDriver API change)" in OCP as Beta, i.e. test it and have docs for it (unless it's Alpha upstream).

Summary: If a Pod has a defined SELinux context (e.g. it uses the "restricted" SCC), uses a ReadWriteOncePod PVC, and the CSI driver responsible for the volume supports this feature, kubelet and the CSI driver will mount the volume directly with the correct SELinux labels. CRI-O then does not need to recursively relabel the volume, and pod startup can be significantly faster. We will need thorough documentation for this.

This upstream epic actually will be implemented by us!

Why is this important?

- We get this upstream feature through Kubernetes rebase. We should ensure it works well in OCP and we have docs for it.

Upstream links

- Enhancement issue: [1710]

- KEP: https://github.com/kubernetes/enhancements/pull/3172

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

- ...

Dependencies (internal and external)

- External: the feature is currently scheduled for Beta in Kubernetes 1.27, i.e. OCP 4.14, but it may change before Kubernetes 1.27 GA.

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

Add the metrics described in the upstream KEP to telemetry, so we know how many clusters/Pods would be affected when we expose SELinux mount to all volume types.

We want:

- volume_manager_selinux_container_errors_total + volume_manager_selinux_container_warnings_total + volume_manager_selinux_pod_context_mismatch_errors_total + volume_manager_selinux_pod_context_mismatch_warnings_total - these show user configuration errors.

- volume_manager_selinux_volume_context_mismatch_errors_total + volume_manager_selinux_volume_context_mismatch_warnings_total - these show Pods that use the same volume with different SELinux context. This will not be supported when we extend mounting with SELinux to all volume types.

As a cluster user, I want to use mounting with SELinux context without any configuration.

This means OCP ships CSIDriver objects with "SELinuxMount: true" for CSI drivers that support mounting with "-o context". That is, all CSI drivers that are based on block volumes and use ext4/xfs should have this enabled.

- https://github.com/openshift/alibaba-disk-csi-driver-operator/pull/57

- https://github.com/openshift/azure-disk-csi-driver-operator/pull/93

- https://github.com/openshift/gcp-pd-csi-driver-operator/pull/81

- https://github.com/openshift/ibm-vpc-block-csi-driver-operator/pull/77

- https://github.com/openshift/openstack-cinder-csi-driver-operator/pull/129

- https://github.com/openshift/vmware-vsphere-csi-driver-operator/pull/165
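The selection rule above can be expressed as a small predicate. This is a sketch under stated assumptions: DriverInfo and its fields are hypothetical (the operators listed above hard-code the CSIDriver object rather than compute it), the driver names are placeholders, and requiring every supported filesystem to be ext4 or xfs is a conservative reading of the rule.

```go
package main

import "fmt"

// DriverInfo is a hypothetical description of a CSI driver shipped by OCP.
type DriverInfo struct {
	Name        string
	BlockBased  bool     // volumes are block devices formatted by the driver
	Filesystems []string // filesystems the driver formats volumes with
}

// supportsSELinuxMount returns true only for block-based drivers whose
// volumes all use ext4 or xfs, which can be mounted with "-o context=...".
func supportsSELinuxMount(d DriverInfo) bool {
	if !d.BlockBased || len(d.Filesystems) == 0 {
		return false
	}
	for _, fs := range d.Filesystems {
		if fs != "ext4" && fs != "xfs" {
			return false
		}
	}
	return true
}

func main() {
	drivers := []DriverInfo{
		{Name: "block.example.csi", BlockBased: true, Filesystems: []string{"ext4", "xfs"}},
		{Name: "nfs.example.csi", BlockBased: false, Filesystems: []string{"nfs"}},
	}
	for _, d := range drivers {
		fmt.Printf("%s: seLinuxMount: %v\n", d.Name, supportsSELinuxMount(d))
	}
}
```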

Test that the metrics described in the KEP provide useful data. For example, check that volume_manager_selinux_volume_context_mismatch_warnings_total increases when two Pods with different SELinux contexts use the same volume through different subpaths.
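To reason about the expected result of that test, the sketch below is a simplified, hypothetical model of what the mismatch warning counter tracks. It is not kubelet code. It relies on the fact that a mount with "-o context" applies one context to the whole filesystem, so different subpaths of the same volume still share a single context.

```go
package main

import "fmt"

// mismatchTracker is a simplified model of
// volume_manager_selinux_volume_context_mismatch_warnings_total.
type mismatchTracker struct {
	contexts map[string]string // volume ID -> SELinux context of the first user
	warnings int
}

func newMismatchTracker() *mismatchTracker {
	return &mismatchTracker{contexts: map[string]string{}}
}

// addPod records that a pod with the given SELinux context uses the volume.
// The subPath is irrelevant: the whole volume is mounted with one context.
func (t *mismatchTracker) addPod(volumeID, seLinuxContext string) {
	existing, ok := t.contexts[volumeID]
	if !ok {
		t.contexts[volumeID] = seLinuxContext
		return
	}
	if existing != seLinuxContext {
		t.warnings++
	}
}

func main() {
	tracker := newMismatchTracker()
	tracker.addPod("vol-1", "system_u:object_r:container_file_t:s0:c1,c2") // pod A, subPath a/
	tracker.addPod("vol-1", "system_u:object_r:container_file_t:s0:c3,c4") // pod B, subPath b/
	fmt.Println("mismatch warnings:", tracker.warnings)
}
```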

Feature Overview (aka. Goal Summary)

Enable Hosted Control Planes guest clusters to support up to 500 worker nodes. This enables customers to run clusters with a large number of worker nodes.

Goals (aka. expected user outcomes)

Max cluster size 250+ worker nodes (mainly about control plane). See XCMSTRAT-371 for additional information.

Service components should not be overwhelmed by additional customer workloads: they should move to larger cloud instances as the worker node count grows, and to smaller cloud instances when the worker node count falls below the threshold.

Requirements (aka. Acceptance Criteria):

| Deployment considerations | List applicable specific needs (N/A = not applicable) |
| Self-managed, managed, or both | Managed |
| Classic (standalone cluster) | N/A |
| Hosted control planes | Yes |
| Multi node, Compact (three node), or Single node (SNO), or all | N/A |
| Connected / Restricted Network | Connected |
| Architectures, e.g. x86_64, ARM (aarch64), IBM Power (ppc64le), and IBM Z (s390x) | x86_64, ARM |
| Operator compatibility | N/A |
| Backport needed (list applicable versions) | N/A |
| UI need (e.g. OpenShift Console, dynamic plugin, OCM) | N/A |
| Other (please specify) | |

Questions to Answer (Optional):

Check the OCM and CAPI requirements for exposing a larger worker node count.

Documentation:

- Design document detailing the autoscaling mechanism and configuration options

- User documentation explaining how to configure and use the autoscaling feature.

Acceptance Criteria

- Configure max-node size from CAPI

- Management cluster nodes automatically scale up and down based on the hosted cluster's size.

- Scaling occurs without manual intervention.

- A set of "warm" nodes are maintained for immediate hosted cluster creation.

- Resizing nodes should not cause significant downtime for the control plane.

- Scaling operations should be efficient and have minimal impact on cluster performance.

Goal

- Dynamically scale the serving components of control planes

Why is this important?

- To be able to have clusters with a large number of worker nodes

Scenarios

- When a hosted cluster's worker node count increases past X, the serving components are moved to larger cloud instances

- When a hosted cluster's worker node count falls below a threshold, the serving components are moved to smaller cloud instances.
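The scenarios above amount to mapping a worker node count onto a size class. A minimal sketch follows; the class names and boundaries are purely illustrative assumptions (only the 500-node ceiling comes from this feature), and real thresholds would come from configuration rather than a hard-coded table.

```go
package main

import "fmt"

// sizeClass is a hypothetical size bucket covering an inclusive range of
// worker node counts.
type sizeClass struct {
	Name     string
	MinNodes int
	MaxNodes int
}

// classes must be sorted and contiguous; these boundaries are illustrative.
var classes = []sizeClass{
	{Name: "small", MinNodes: 0, MaxNodes: 10},
	{Name: "medium", MinNodes: 11, MaxNodes: 100},
	{Name: "large", MinNodes: 101, MaxNodes: 500},
}

// sizeFor returns the size class for the current worker count. Growing past a
// class's MaxNodes moves the cluster up; shrinking below MinNodes moves it down.
func sizeFor(workers int) (string, error) {
	for _, c := range classes {
		if workers >= c.MinNodes && workers <= c.MaxNodes {
			return c.Name, nil
		}
	}
	return "", fmt.Errorf("no size class covers %d workers", workers)
}

func main() {
	for _, n := range []int{3, 42, 250} {
		size, err := sizeFor(n)
		fmt.Println(n, "workers ->", size, err)
	}
}
```

With hard boundaries like these, a cluster hovering around a boundary would move back and forth between instance sizes; adding a hysteresis band (different thresholds for scaling up and down) is one common way to avoid that.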

Acceptance Criteria

- Dev - Has a valid enhancement if necessary

- CI - MUST be running successfully with tests automated

- QE - covered in Polarion test plan and tests implemented

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Technical Enablement <link to Feature Enablement Presentation>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Enhancement merged: <link to meaningful PR or GitHub Issue>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

User Story:

When a HostedCluster is given a size label, it needs to ensure that request-serving nodes exist for that size label and, once they do, reschedule the request-serving pods onto the appropriate nodes.

Acceptance Criteria:

Description of criteria:

- HostedClusters reconcile the content of hypershift.openshift.io/request-serving-node-additional-selector label

- Reschedule the request serving pods to run on instances matching the label
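The rescheduling step boils down to layering an additional node selector onto the request-serving pods and checking whether their current nodes still satisfy it. The sketch below assumes the additional selector has already been derived from the hypershift.openshift.io/request-serving-node-additional-selector label as a key/value map; how that label value is parsed is not shown, and all label keys in the example are hypothetical.

```go
package main

import "fmt"

// mergeNodeSelector returns the pod's nodeSelector with the additional
// request-serving selector layered on top (additional keys win).
func mergeNodeSelector(podSelector, additional map[string]string) map[string]string {
	merged := make(map[string]string, len(podSelector)+len(additional))
	for k, v := range podSelector {
		merged[k] = v
	}
	for k, v := range additional {
		merged[k] = v
	}
	return merged
}

// matches reports whether a node's labels satisfy every selector entry, i.e.
// whether a pod already running there can stay put.
func matches(nodeLabels, selector map[string]string) bool {
	for k, v := range selector {
		if got, ok := nodeLabels[k]; !ok || got != v {
			return false
		}
	}
	return true
}

func main() {
	podSel := map[string]string{"example.com/role": "request-serving"}
	extra := map[string]string{"example.com/size": "large"}
	merged := mergeNodeSelector(podSel, extra)
	node := map[string]string{"example.com/role": "request-serving", "example.com/size": "small"}
	fmt.Println("pod must move:", !matches(node, merged))
}
```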


This does not require a design proposal.


This does not require a feature gate.

User Story:

As a service provider, I want to be able to:

- Configure priority and fairness settings per HostedCluster size and force these settings to be applied on the resulting hosted cluster.

so that I can achieve

- Preventing users of a hosted cluster from bringing down the HostedCluster kube-apiserver with their workloads.

Acceptance Criteria:

Description of criteria:

- HostedCluster priority and fairness settings should be configurable per cluster size in the ClusterSizingConfiguration CR

- Any changes in priority and fairness inside the HostedCluster should be prevented and overridden by whatever is configured on the provider side.

- With the proper settings, heavy use of the API from user workloads should not result in the KAS pod getting OOMKilled due to lack of resources.


This requires/does not require a design proposal.


This requires/does not require a feature gate.

Feature Overview

Move to using the upstream Cluster API (CAPI) in place of the current implementation of the Machine API for standalone OpenShift.

Prerequisite work goals completed in OCPSTRAT-1122

Complete the design of the Cluster API (CAPI) architecture and build the core operator logic needed for Phase-1, incorporating the assets from different repositories to simplify asset management.

Phases 1 and 2 cover implementing base functionality for CAPI.

Background, and strategic fit

- Initially, CAPI did not meet OCP's requirements for cluster/machine management, but the project has moved on: CAPI is now a better fit and has better community involvement.

- CAPI has much better community interaction than MAPI.

- Other projects are considering using CAPI and it would be cleaner to have one solution

- Long term it will allow us to add new features more easily in one place vs. doing this in multiple places.

Acceptance Criteria

There must be no negative effect on customers/users of the MAPI: this API must remain accessible to them. How it is implemented "under the covers", and whether that implementation leverages CAPI, is open.

Sets up the CAPI ecosystem for vSphere.

So far we haven't tested this provider at all. We need to run it and identify any issues with it.

Steps:

- Try to run the vSphere provider on OCP

- Try to create MachineSets

- Try various features out and note down bugs

- Create stories for resolving issues upstream and downstream

Outcome:

- Stories created in the epic for the vSphere items that need to be resolved

Feature Overview (aka. Goal Summary)

Enable selective management of HostedCluster resources via annotations, allowing hypershift operators to operate concurrently on a management cluster without interfering with each other. This feature facilitates testing new operator versions or configurations in a controlled manner, ensuring that production workloads remain unaffected.

Goals (aka. expected user outcomes)

- Primary User Type/Persona: Cluster Service Providers

- Observable Functionality: Administrators can deploy "test" and "production" hypershift operators within the same management cluster. The "test" operator will manage only those HostedClusters annotated according to a predefined specification, while the "production" operator will ignore these annotated HostedClusters, thus maintaining the integrity of existing workloads.

Requirements (aka. Acceptance Criteria):

- The "test" operator must respond only to HostedClusters that carry a specific annotation defined by the HOSTEDCLUSTERS_SCOPE_ANNOTATION environment variable.

- The "production" operator must ignore HostedClusters with the specified annotation.

- The feature is activated via the ENABLE_HOSTEDCLUSTERS_ANNOTATION_SCOPING environment variable. When not set, the operators should behave as they currently do, without any annotation-based filtering.

- Upstream documentation to describe how to set up and use annotation-based scoping, including setting environment variables and annotating HostedClusters appropriately.

- The solution should not impact the core functionality of HCP for self-managed and cloud-services (ROSA/ARO)
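The filtering rule described by these requirements can be sketched as a single predicate. The two environment variable names come from the requirements above; everything else is an assumption. In particular, the IsTest field is hypothetical, because the requirements do not say how an operator instance knows whether it is the "test" or the "production" one, and treating an empty annotation name as "no filtering" is a design choice of this sketch.

```go
package main

import (
	"fmt"
	"os"
	"strconv"
)

// scopeConfig captures the two environment variables from the requirements.
// IsTest is a hypothetical addition marking the "test" operator instance.
type scopeConfig struct {
	Enabled    bool
	Annotation string
	IsTest     bool
}

// loadScopeConfig reads ENABLE_HOSTEDCLUSTERS_ANNOTATION_SCOPING and
// HOSTEDCLUSTERS_SCOPE_ANNOTATION. ParseBool returns false for an unset or
// unparsable value, which leaves scoping disabled.
func loadScopeConfig(isTest bool) scopeConfig {
	enabled, _ := strconv.ParseBool(os.Getenv("ENABLE_HOSTEDCLUSTERS_ANNOTATION_SCOPING"))
	return scopeConfig{
		Enabled:    enabled,
		Annotation: os.Getenv("HOSTEDCLUSTERS_SCOPE_ANNOTATION"),
		IsTest:     isTest,
	}
}

// shouldReconcile decides whether this operator instance manages a
// HostedCluster with the given annotations.
func shouldReconcile(cfg scopeConfig, annotations map[string]string) bool {
	if !cfg.Enabled || cfg.Annotation == "" {
		return true // current behavior: no annotation-based filtering
	}
	_, annotated := annotations[cfg.Annotation]
	if cfg.IsTest {
		return annotated // "test" operator: only annotated HostedClusters
	}
	return !annotated // "production" operator: skip annotated HostedClusters
}

func main() {
	cfg := loadScopeConfig(false)
	fmt.Println("scoping enabled from environment:", cfg.Enabled)

	annotated := map[string]string{"example.com/scope": "canary"}
	test := scopeConfig{Enabled: true, Annotation: "example.com/scope", IsTest: true}
	prod := scopeConfig{Enabled: true, Annotation: "example.com/scope"}
	fmt.Println("test manages annotated:", shouldReconcile(test, annotated))
	fmt.Println("production manages annotated:", shouldReconcile(prod, annotated))
}
```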

| Deployment considerations | List applicable specific needs (N/A = not applicable) |
| Self-managed, managed, or both | |
| Classic (standalone cluster) | |
| Hosted control planes | Applicable |
| Multi node, Compact (three node), or Single node (SNO), or all | |
| Connected / Restricted Network | |
| Architectures, e.g. x86_64, ARM (aarch64), IBM Power (ppc64le), and IBM Z (s390x) | |
| Operator compatibility | |
| Backport needed (list applicable versions) | |
| UI need (e.g. OpenShift Console, dynamic plugin, OCM) | |
| Other (please specify) | |

Use Cases (Optional):

- Testing new versions or configurations of hypershift operators without impacting existing HostedClusters.

- Gradual rollout of operator updates to a subset of HostedClusters for performance and compatibility testing.

Questions to Answer (Optional):

Include a list of refinement / architectural questions that may need to be answered before coding can begin. Initial completion during Refinement status.

<your text here>

Out of Scope

- Automatic migration of HostedClusters between "test" and "production" operators.

- Non-IBM integrations (e.g., MCE)

Background

Current hypershift operator functionality does not allow for selective management of HostedClusters, limiting the ability to test new operator versions or configurations in a live environment without affecting production workloads.

Customer Considerations

Provide any additional customer-specific considerations that must be made when designing and delivering the Feature. Initial completion during Refinement status.

<your text here>

Documentation Considerations

Upstream only for now

Interoperability Considerations

- The solution should not impact the core functionality of HCP for self-managed and cloud-services (ROSA/ARO)

As a mgmt cluster admin, I want to be able to run multiple hypershift-operators that operate on a disjoint set of HostedClusters.

Create more networking-related dashboards (monitoring dashboards accessible from the Observe > Dashboards menu in the admin perspective).

Start with just a couple of areas:

- Host network dashboard (using node-exporter network / netstat metrics - related to CMO)

- OVN/OVS health dashboard (using ovn/ovs metrics)

- Ingress dashboard (route and shard stats), related to the Ingress operator / NetEdge team

- DNS dashboard (if time permits)

More info/discussion in this work doc: https://docs.google.com/document/d/1ByNIJiOzd6w5csFYpC27NdOydnBg8Tx45uL4-7v-aCM/edit

Create more networking-related dashboards (monitoring dashboards accessible from the Observe > Dashboards menu in the admin perspective).

Start with just a couple of areas:

- Host network dashboard (using node-exporter network / netstat metrics - related to CMO)

- OVN/OVS health dashboard (using ovn/ovs metrics)

More info/discussion in this work doc: https://docs.google.com/document/d/1ByNIJiOzd6w5csFYpC27NdOydnBg8Tx45uL4-7v-aCM/edit

Martin Kennelly is our contact point from the SDN team

Create a dashboard from the CNO

Current metrics documentation:

- https://docs.google.com/document/d/1lItYV0tTt5-ivX77izb1KuzN9S8-7YgO9ndlhATaVUg/edit

- https://github.com/ovn-org/ovn-kubernetes/blob/master/docs/metrics.md

Include metrics for:

- pod/svc/netpol setup latency

- ovs/ovn CPU and memory

- network stats: rx/tx bytes, drops and errors per interface (not all interfaces are monitored by default, but they will become more configurable via another task: NETOBSERV-1021)

Template:

Networking Definition of Planned

Epic Template descriptions and documentation

Epic Goal

Make limited live migration available to unmanaged clusters in 4.15.

Why is this important?

Planning Done Checklist

The following items must be completed on the Epic prior to moving the Epic from Planning to the ToDo status

- Priority is set by engineering

- Epic must be linked to a Parent Feature

- Target version must be set

- Assignee must be set

- Enhancement Proposal is Implementable

- No outstanding questions about major work breakdown

- Are all Stakeholders known? Have they all been notified about this item?

- Does this epic affect SD? Have they been notified? (View plan definition for current suggested assignee)

- Please use the “Discussion Needed: Service Delivery Architecture Overview” checkbox to facilitate the conversation with SD Architects. The SD architecture team monitors this checkbox which should then spur the conversation between SD and epic stakeholders. Once the conversation has occurred, uncheck the “Discussion Needed: Service Delivery Architecture Overview” checkbox and record the outcome of the discussion in the epic description here.

- The guidance here is that unless it is very clear that your epic doesn’t have any managed services impact, default to using the Discussion Needed checkbox to facilitate that conversation.

Additional information on each of the above items can be found here: Networking Definition of Planned

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

...

Dependencies (internal and external)

1. ...

Previous Work (Optional):

1. …

Open questions:

1. …

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

Template:

Networking Definition of Planned

Epic Template descriptions and documentation

Epic Goal

Make limited live migration available to unmanaged clusters in 4.15.

Why is this important?

Planning Done Checklist

The following items must be completed on the Epic prior to moving the Epic from Planning to the ToDo status

Priority is set by engineering

Epic must be linked to a Parent Feature

Target version must be set

Assignee must be set

Enhancement Proposal is Implementable

No outstanding questions about major work breakdown

Are all Stakeholders known? Have they all been notified about this item?

Does this epic affect SD? Have they been notified? (View plan definition for current suggested assignee)

- Please use the “Discussion Needed: Service Delivery Architecture Overview” checkbox to facilitate the conversation with SD Architects. The SD architecture team monitors this checkbox which should then spur the conversation between SD and epic stakeholders. Once the conversation has occurred, uncheck the “Discussion Needed: Service Delivery Architecture Overview” checkbox and record the outcome of the discussion in the epic description here.

- The guidance here is that unless it is very clear that your epic doesn’t have any managed services impact, default to use the Discussion Needed checkbox to facilitate that conversation.

Additional information on each of the above items can be found here: Networking Definition of Planned

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

...

Dependencies (internal and external)

1.

...

Previous Work (Optional):

1. …

Open questions:

1. …

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

CNO shall allow users to trigger live migration on an unmanaged cluster.
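As a rough illustration of what triggering the migration could look like from the user's side, the sketch below patches the cluster-scoped Network config. It assumes the annotation-based trigger (`network.openshift.io/network-type-migration`) that OpenShift documents for limited live migration to OVN-Kubernetes; confirm the exact fields against the documentation for your release before running it. `MIGRATION_PATCH` and `trigger_live_migration` are hypothetical names.

```shell
#!/bin/sh
# Sketch only: assumes the annotation-based live migration trigger
# (network.openshift.io/network-type-migration) plus the target networkType.
# Verify against the documentation for your OpenShift release first.
MIGRATION_PATCH='{"metadata":{"annotations":{"network.openshift.io/network-type-migration":""}},"spec":{"networkType":"OVNKubernetes"}}'

# Hypothetical helper: applies the patch to the cluster in the current kubeconfig.
trigger_live_migration() {
  oc patch Network.config.openshift.io cluster --type=merge --patch "$MIGRATION_PATCH"
}
```

After calling `trigger_live_migration`, progress can be followed with `oc get co`, since the network cluster operator reports the rollout.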

Feature Overview (aka. Goal Summary)

The Agent Based installer is a clean and simple way to install new instances of OpenShift in disconnected environments, guiding the user through the questions and information needed to successfully install an OpenShift cluster. We need to bring this highly useful feature to the IBM Power and IBM zSystem architectures.

Goals (aka. expected user outcomes)

Agent based installer on Power and zSystems should reflect what is available for x86 today.

Requirements (aka. Acceptance Criteria):

Able to use the agent based installer to create OpenShift clusters on Power and zSystem architectures in disconnected environments.

Use Cases (Optional):

Include use case diagrams, main success scenarios, alternative flow scenarios. Initial completion during Refinement status.

Questions to Answer (Optional):

Include a list of refinement / architectural questions that may need to be answered before coding can begin. Initial completion during Refinement status.

Out of Scope

High-level list of items that are out of scope. Initial completion during Refinement status.

Background

Provide any additional context that is needed to frame the feature. Initial completion during Refinement status.

Customer Considerations

Provide any additional customer-specific considerations that must be made when designing and delivering the Feature. Initial completion during Refinement status.

Documentation Considerations

Provide information that needs to be considered and planned so that documentation will meet customer needs. Initial completion during Refinement status.

Interoperability Considerations

Which other projects and versions in our portfolio does this feature impact? What interoperability test scenarios should be factored by the layered products? Initial completion during Refinement status.

Epic Goal

- The goal of this Epic is to enable the Agent Based Installer for IBM Power and IBM zSystems (P/Z).

Why is this important?

- The Agent Based Installer is a research spike item for the Multi-Arch team for the 4.12 release and later.

Scenarios

1. …

Acceptance Criteria

- See "Definition of Done" below

Dependencies (internal and external)

1. …

Previous Work (Optional):

1. …

Open questions:

1. …

Done Checklist

- CI - For new features (non-enablement), existing Multi-Arch CI jobs are not broken by the Epic

- Release Enablement: <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - If the Epic is adding a new stream, downstream build attached to advisory: <link to errata>

- QE - Test plans in Test Plan tracking software (e.g. Polarion, RQM, etc.): <link or reference to the Test Plan>

- QE - Automated tests merged: <link or reference to automated tests>

- QE - QE to verify documentation when testing

- DOC - Downstream documentation merged: <link to meaningful PR>

- All the stories, tasks, sub-tasks and bugs that belong to this epic need to have been completed and indicated by a status of 'Done'.

Enable openshift-install to create an agent-based installation ISO for IBM Power.

As the multi-arch engineer, I would like to build an environment and deploy it using the Agent Based Installer, so that I can confirm that the feature works as specified.
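A minimal sketch of the inputs such a test deployment needs, assuming a single-node cluster on IBM Power: `openshift-install agent create image` reads `install-config.yaml` and `agent-config.yaml` from its asset directory. The helper name `write_agent_configs` and every value below (domain, cluster name, CIDRs, rendezvous IP, pull secret, SSH key) are placeholders; the setting that targets Power is `architecture: ppc64le`.

```shell
#!/bin/sh
# Hypothetical helper: writes placeholder ABI inputs for a single-node
# ppc64le cluster into the directory given as $1.
write_agent_configs() {
  local dir="$1"
  mkdir -p "$dir"
  # install-config.yaml: cluster-wide settings; architecture selects Power.
  cat > "$dir/install-config.yaml" <<'EOF'
apiVersion: v1
baseDomain: example.com
metadata:
  name: sno-power
controlPlane:
  name: master
  replicas: 1
  architecture: ppc64le
compute:
- name: worker
  replicas: 0
  architecture: ppc64le
networking:
  networkType: OVNKubernetes
  clusterNetwork:
  - cidr: 10.128.0.0/14
    hostPrefix: 23
  machineNetwork:
  - cidr: 192.168.122.0/24
  serviceNetwork:
  - 172.30.0.0/16
platform:
  none: {}
pullSecret: '<pull-secret>'
sshKey: '<ssh-public-key>'
EOF
  # agent-config.yaml: the rendezvous IP must belong to the machine network.
  cat > "$dir/agent-config.yaml" <<'EOF'
apiVersion: v1beta1
kind: AgentConfig
metadata:
  name: sno-power
rendezvousIP: 192.168.122.10
EOF
}
```

For example, `write_agent_configs ./power-sno` followed by `openshift-install agent create image --dir ./power-sno`. The release payload used must provide ppc64le content for the resulting ISO to boot on Power.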

Entrance Criteria

- (If there is research) research completed and proven that the feature could be done

Acceptance Criteria

- “Proof” of verification (Logs, etc.)

- If independent test code written, a link to the code added to the JIRA story

Feature Overview (aka. Goal Summary)

The OpenShift Assisted Installer is a user-friendly OpenShift installation solution for various platforms, with a focus on bare metal. This very useful functionality should be made available for the IBM zSystem platform.

Goals (aka. expected user outcomes)

Use of the OpenShift Assisted Installer to install OpenShift on an IBM zSystem

Requirements (aka. Acceptance Criteria):

Using the OpenShift Assisted Installer to install OpenShift on an IBM zSystem

Use Cases (Optional):

Include use case diagrams, main success scenarios, alternative flow scenarios. Initial completion during Refinement status.

Questions to Answer (Optional):

Include a list of refinement / architectural questions that may need to be answered before coding can begin. Initial completion during Refinement status.

Out of Scope

High-level list of items that are out of scope. Initial completion during Refinement status.

Background

Provide any additional context that is needed to frame the feature. Initial completion during Refinement status.

Customer Considerations

Provide any additional customer-specific considerations that must be made when designing and delivering the Feature. Initial completion during Refinement status.

Documentation Considerations

Provide information that needs to be considered and planned so that documentation will meet customer needs. Initial completion during Refinement status.

Interoperability Considerations

Which other projects and versions in our portfolio does this feature impact? What interoperability test scenarios should be factored by the layered products? Initial completion during Refinement status.

As a multi-arch development engineer, I would like to ensure that the Assisted Installer workflow is fully functional and supported for z/VM deployments.

Acceptance Criteria

- Feature is implemented, tested, verified by QE, documented, and technically enabled.

- Stories closed.

Description of the problem:

Apart from the API, there is no way to provide additional kernel arguments to the coreos-installer.

For zVM installations, additional kernel arguments are required to enable network or storage devices correctly. If they are not provided, the node ends up in an emergency shell when it reboots after the CoreOS installation.

The following is an example of an API call to install a zVM node. The call must be made separately for each node, after that node has been discovered:

    curl "https://api.openshift.com/api/assisted-install/v2/infra-envs/${INFRA_ENV_ID}/hosts/$1/installer-args" \
      -X PATCH \
      -H "Authorization: Bearer ${API_TOKEN}" \
      -H "Content-Type: application/json" \
      -d '{
        "args": [
          "--append-karg", "rd.neednet=1",
          "--append-karg", "ip=10.14.6.4::10.14.6.1:255.255.255.0:master-1.boea3e06.lnxero1.boe:encbdd0:none",
          "--append-karg", "nameserver=10.14.6.1",
          "--append-karg", "ip=[fd00::4]::[fd00::1]:64::encbdd0:none",
          "--append-karg", "nameserver=[fd00::1]",
          "--append-karg", "zfcp.allow_lun_scan=0",
          "--append-karg", "rd.znet=qeth,0.0.bdd0,0.0.bdd1,0.0.bdd2,layer2=1",
          "--append-karg", "rd.zfcp=0.0.8003,0x500507630400d1e3,0x4000404700000000",
          "--append-karg", "rd.zfcp=0.0.8103,0x500507630400d1e3,0x4000404700000000"
        ]
      }' | jq

The kernel arguments might differ between discovered nodes.

This applies to zVM only. On KVM (s390x) these additional kernel arguments are not needed.
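Because the arguments differ per node, it can help to generate the request body rather than editing JSON by hand for each host. The sketch below is a hypothetical POSIX shell helper, `build_installer_args`, that turns a list of kernel arguments into the `{"args": ["--append-karg", ...]}` body used in the call above. It assumes the arguments contain no double quotes or backslashes (true for the examples above) and rejects any that do, instead of attempting JSON escaping.

```shell
#!/bin/sh
# Hypothetical helper: prints the installer-args JSON body for one node,
# pairing every kernel argument with a preceding "--append-karg".
build_installer_args() {
  local out="" karg
  for karg in "$@"; do
    # No JSON escaping is done, so refuse characters that would need it.
    case "$karg" in
      *'"'*|*'\'*) echo "unsupported character in karg: $karg" >&2; return 1 ;;
    esac
    # ${out:+,} adds a separating comma only after the first pair.
    out="${out}${out:+,}\"--append-karg\",\"${karg}\""
  done
  printf '{"args":[%s]}\n' "$out"
}
```

For example, the output can be piped into the same request using curl's `--data @-` (read the body from stdin): `build_installer_args rd.neednet=1 zfcp.allow_lun_scan=0 | curl -X PATCH -H "Authorization: Bearer ${API_TOKEN}" -H "Content-Type: application/json" --data @- "https://api.openshift.com/api/assisted-install/v2/infra-envs/${INFRA_ENV_ID}/hosts/${HOST_ID}/installer-args"`, where `HOST_ID` is a placeholder for the discovered host's ID.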

How reproducible:

Configure one zVM node (for SNO) or at least three zVM nodes, create a new cluster by choosing a cluster option (SNO or failover), and discover the nodes.

In the UI, there is no option to enter the required kernel arguments for the coreos-installer.

After validation, start the installation. The installation failed because the nodes could not be rebooted.

Steps to reproduce:

1. Configure at least one zVM node (for SNO), or three for failover.

2. Discover these nodes

3. Start the installation after all validation steps have passed.

Actual results:

The installation failed because the kernel command line does not contain the necessary kernel arguments, so the first reboot fails (the nodes boot into an emergency shell).

There is no option to enter additional kernel arguments in the UI.

Expected results: