Incomplete Features

When this image was assembled, these features were not yet completed. Therefore, only the Jira cards included here are part of this release.

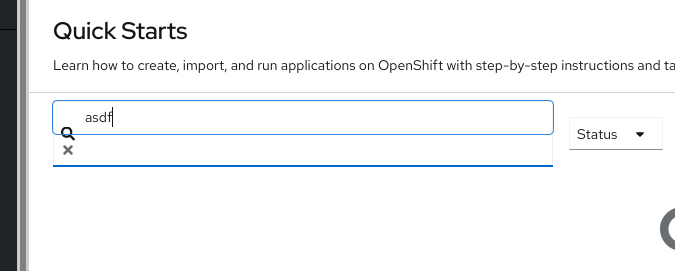

This is for users who are using OpenShift but have not yet begun to explore multicluster and what we offer them.

I'm investigating where Learning paths are today and what is required.

As a user, I'd like to have a learning path for how to get started with Multicluster.

Install MCE

Create multiple clusters

Use HyperShift

Provide access to cluster creation to devs via templates

Scale up to ACM/ACS (OPP?)

Status

https://github.com/patternfly/patternfly-quickstarts/issues/37#issuecomment-1199840223

Goal: Resources provided via the Dynamic Resource Allocation Kubernetes mechanism can be consumed by VMs.

Details: Dynamic Resource Allocation

Goal

Come up with a design of how resources provided by Dynamic Resource Allocation can be consumed by KubeVirt VMs.

Description

The Dynamic Resource Allocation (DRA) feature is an alpha API in Kubernetes 1.26, which is the base for OpenShift 4.13.

This feature provides the ability to create ResourceClaim and ResourceClass objects to request access to resources. This is similar to the dynamic provisioning of a PersistentVolume via a PersistentVolumeClaim and a StorageClass.
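To make the PersistentVolumeClaim/StorageClass analogy concrete, here is a minimal sketch that builds a ResourceClass, a ResourceClaim, and a pod that consumes the claim. The field names follow the `resource.k8s.io/v1alpha1` API as documented for Kubernetes 1.26 (later releases changed this alpha API). The driver, class, claim, and image names are hypothetical. The sketch shows pod consumption only; how a KubeVirt VM would reference a claim is the open design question of this epic.

```python
import json

# Hypothetical names used only for illustration.
DRIVER_NAME = "gpu.resource.example.com"
CLASS_NAME = "example-gpu"

# ResourceClass: cluster-scoped, names the DRA driver
# (comparable to a StorageClass naming a provisioner).
resource_class = {
    "apiVersion": "resource.k8s.io/v1alpha1",
    "kind": "ResourceClass",
    "metadata": {"name": CLASS_NAME},
    "driverName": DRIVER_NAME,
}

# ResourceClaim: namespaced request against a class
# (comparable to a PersistentVolumeClaim).
resource_claim = {
    "apiVersion": "resource.k8s.io/v1alpha1",
    "kind": "ResourceClaim",
    "metadata": {"name": "gpu-claim", "namespace": "demo"},
    "spec": {"resourceClassName": CLASS_NAME},
}

# Pod that lists the claim and lets one container use it.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "gpu-consumer", "namespace": "demo"},
    "spec": {
        "resourceClaims": [
            {"name": "gpu", "source": {"resourceClaimName": "gpu-claim"}}
        ],
        "containers": [
            {
                "name": "main",
                "image": "registry.example.com/app:latest",
                "resources": {"claims": [{"name": "gpu"}]},
            }
        ],
    },
}


def as_list(*objects):
    """Serialize the objects as one v1 List in JSON, which `oc apply -f -` accepts."""
    return json.dumps(
        {"apiVersion": "v1", "kind": "List", "items": list(objects)}, indent=2
    )


print(as_list(resource_class, resource_claim, pod))
```

The cluster must have the `DynamicResourceAllocation` feature gate enabled for these alpha objects to be served.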

NVIDIA has been a lead contributor to the KEP and already has an initial implementation of a DRA driver and plugin, with a nice demo recording. NVIDIA expects to have this DRA driver available in CY23 Q3 or Q4, so likely in NVIDIA GPU Operator v23.9, around OpenShift 4.14.

When asked about the availability of MIG-backed vGPU for Kubernetes, NVIDIA said that the timeframe is not decided yet, because it will likely use DRA for MIG device creation and registration with the vGPU host driver. The MIG-backed vGPU feature for OpenShift Virtualization will then likely require DRA support to request vGPU resources for the VMs.

Not having MIG-backed vGPU is a risk for OpenShift Virtualization adoption in GPU use cases, such as virtual workstations for rendering with Windows-only software. Customers who want a mix of passthrough, time-based vGPU and MIG-backed vGPU will prefer competitors who offer the full range of options. The certification of NVIDIA solutions like NVIDIA Omniverse will also be blocked, despite its great potential to increase OpenShift consumption, as it uses RTX/A40 GPUs for virtual workstations (not certified by NVIDIA on OpenShift Virtualization yet) and A100/H100 GPUs for physics simulation, both use cases probably leveraging vGPUs [7]. Many conditions must be met for that to happen, and MIG-backed vGPU support is one of them.

User Stories

- GPU consumption optimization

"As an Admin, I want to let the NVIDIA GPU DRA driver provision vGPUs for OpenShift Virtualization, so that it optimizes the allocation with dynamic provisioning of time-based or MIG-backed vGPUs."

- GPU mixed types per server

"As an Admin, I want to be able to mix different types of GPU to collocate different types of workloads on the same host, in order to improve multi-pod/stack performance."

Non-Requirements

- List of things not included in this epic, to alleviate any doubt raised during the grooming process.

Notes

- Any additional details or decisions made/needed

References

- Kubernetes > Dynamic Resource Allocation

- Kubernetes Enhancement Proposal - Dynamic Resource Allocation

- NVIDIA GPU DRA driver - Repository

- NVIDIA GPU DRA driver - demo recording

- NVIDIA Omniverse

- NVIDIA GPUs for Virtualization

Done Checklist

| Who | What | Reference |
|---|---|---|
| DEV | Upstream roadmap issue (or individual upstream PRs) | <link to GitHub Issue> |
| DEV | Upstream documentation merged | <link to meaningful PR> |
| DEV | gap doc updated | <name sheet and cell> |
| DEV | Upgrade consideration | <link to upgrade-related test or design doc> |
| DEV | CEE/PX summary presentation | label epic with cee-training and add a <link to your support-facing preso> |
| QE | Test plans in Polarion | <link or reference to Polarion> |
| QE | Automated tests merged | <link or reference to automated tests> |
| DOC | Downstream documentation merged | <link to meaningful PR> |

Part of making https://kubernetes.io/docs/concepts/scheduling-eviction/dynamic-resource-allocation available for early adoption.

Goal:

As an administrator, I would like to deploy OpenShift 4 clusters to AWS C2S region

Problem:

Customers were able to deploy to AWS C2S region in OCP 3.11, but our global configuration in OCP 4.1 doesn't support this.

Why is this important:

- Many of our public sector customers would like to move off 3.11 and on to 4.1, but missing support for the AWS C2S region will prevent them from migrating their environments.

Lifecycle Information:

- Core

Previous Work:

Here are the relevant PRs from OCP 3.11. You can see that these endpoints are not part of the standard SDK (they use an entirely separate SDK). To support these regions the endpoints had to be configured explicitly.

- Kube: https://github.com/kubernetes/kubernetes/pull/72245

- Autoscaler: https://github.com/kubernetes/autoscaler/pull/1717

- Installer: https://github.com/openshift/openshift-ansible/pull/11277/
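As a rough illustration of what "configured explicitly" means here, the sketch below resolves a service endpoint from an explicit override map and falls back to the standard public naming pattern only when no override is given. The function name and the override URLs are hypothetical; the actual C2S endpoints are not stated in this document.

```python
def endpoint_for(service: str, region: str, overrides: dict) -> str:
    """Return the explicit override for a service, else the standard public pattern."""
    default = f"https://{service}.{region}.amazonaws.com"
    return overrides.get(service, default)


# Hypothetical private endpoints, for illustration only.
overrides = {
    "ec2": "https://ec2.private.example.internal",
    "elasticloadbalancing": "https://elb.private.example.internal",
}

print(endpoint_for("ec2", "us-east-1", overrides))
print(endpoint_for("s3", "us-east-1", overrides))
```

Every component listed under Dependencies would need to honour the same override map, which is why custom API endpoint support (with a custom CA) comes first.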

Seth Jennings has put together a highly customized POC.

Dependencies:

- Custom API endpoint support w/ CA

- Cloud / Machine API

- Image Registry

- Ingress

- Kube Controller Manager

- Cloud Credential Operator

- others?

- Require access to local/private/hidden AWS environment

Prioritized epics + deliverables (in scope / not in scope):

- Allow AWS C2S region to be specified for OpenShift cluster deployment

- Enable customers to use their own managed internal/cluster DNS solutions due to provider and operational restrictions

- Document deploying OpenShift to AWS C2S region

- Enable CI for the AWS C2S region

Related : https://jira.coreos.com/browse/CORS-1271

Estimate (XS, S, M, L, XL, XXL): L

Customers: North America Public Sector and Government Agencies

Open Questions:

Feature Overview

Plugin teams need a mechanism to extend the OCP console that is decoupled enough for them to deliver at the cadence of their own projects, rather than being forced into the OCP Console release timelines.

The OCP Console Dynamic Plugin Framework will enable all our plugin teams to do the following:

- Extend the Console

- Deliver UI code with their Operator

- Work in their own git Repo

- Deliver at their own cadence

Goals

- Operators can deliver console plugins separate from the console image and update plugins when the operator updates.

- The dynamic plugin API is similar to the static plugin API to ease migration.

- Plugins can use shared console components such as list and details page components.

- Shared components from core will be part of a well-defined plugin API.

- Plugins can use Patternfly 4 components.

- Cluster admins control what plugins are enabled.

- Misbehaving plugins should not break console.

- Existing static plugins are not affected and will continue to work as expected.

Out of Scope

- Initially we don't plan to make this a public API. The target use is for Red Hat operators. We might reevaluate later when dynamic plugins are more mature.

- We can't avoid breaking changes in console dependencies such as Patternfly even if we don't break the console plugin API itself. We'll need a way for plugins to declare compatibility.

- Plugins won't be sandboxed. They will have full JavaScript access to the DOM and network. Plugins won't be enabled by default, however. A cluster admin will need to enable the plugin.

- This proposal does not cover allowing plugins to contribute backend console endpoints.

Requirements

| Requirement | Notes | isMvp? |
|---|---|---|
| UI to enable and disable plugins | | YES |
| Dynamic Plugin Framework in place | | YES |
| Testing Infra up and running | | YES |
| Docs and read me for creating and testing Plugins | | YES |
| CI - MUST be running successfully with test automation | This is a requirement for ALL features. | YES |
| Release Technical Enablement | Provide necessary release enablement details and documents. | YES |

Documentation Considerations

Questions to be addressed:

- What educational or reference material (docs) is required to support this product feature? For users/admins? Other functions (security officers, etc)?

- Does this feature have doc impact?

- New Content, Updates to existing content, Release Note, or No Doc Impact

- If unsure and no Technical Writer is available, please contact Content Strategy.

- What concepts do customers need to understand to be successful in [action]?

- How do we expect customers will use the feature? For what purpose(s)?

- What reference material might a customer want/need to complete [action]?

- Is there source material that can be used as reference for the Technical Writer in writing the content? If yes, please link if available.

- What is the doc impact (New Content, Updates to existing content, or Release Note)?

Console static plugins, maintained as part of the frontend monorepo, currently use static extension types (packages/console-plugin-sdk/src/typings) which directly reference various kinds of objects, including React components and arbitrary functions.

To ease the long-term transition from static to dynamic plugins, we should support a use case where an existing static plugin goes through the following stages:

- use static extensions only (we are here)

- use both static and dynamic extensions

- use dynamic extensions only (ideal target for 4.7)

Once a static plugin reaches the "use dynamic extensions only" stage, its maintainers can move it out of the Console monorepo - the plugin becomes dynamic, shipped via the corresponding operator and loaded by Console app at runtime.

Dynamic plugins will need changes to the console operator config to be enabled and disabled. We'll need either a new CRD or an annotation on CSVs for console to discover when plugins are available.

This story tracks any API updates needed to openshift/api and any operator updates needed to wire through dynamic plugins as console config.

See https://github.com/openshift/enhancements/pull/441 for design details.

Feature Overview

- This Section: High-level description of the feature, i.e., an executive summary

- Note: A Feature is a capability or a well defined set of functionality that delivers business value. Features can include additions or changes to existing functionality. Features can easily span multiple teams, and multiple releases.

Goals

- This Section: Provide a high-level goal statement, giving user context and expected user outcome(s) for this feature

- …

Requirements

- This Section: A list of specific needs or objectives that a Feature must deliver to satisfy the Feature. Some requirements will be flagged as MVP. If an MVP gets shifted, the feature shifts. If a non-MVP requirement slips, it does not shift the feature.

| Requirement | Notes | isMvp? |
|---|---|---|
| CI - MUST be running successfully with test automation | This is a requirement for ALL features. | YES |
| Release Technical Enablement | Provide necessary release enablement details and documents. | YES |

(Optional) Use Cases

This Section:

- Main success scenarios - high-level user stories

- Alternate flow/scenarios - high-level user stories

- ...

Questions to answer…

- ...

Out of Scope

- …

Background, and strategic fit

This Section: What does the person writing code, testing, documenting need to know? What context can be provided to frame this feature.

Assumptions

- ...

Customer Considerations

- ...

Documentation Considerations

Questions to be addressed:

- What educational or reference material (docs) is required to support this product feature? For users/admins? Other functions (security officers, etc)?

- Does this feature have doc impact?

- New Content, Updates to existing content, Release Note, or No Doc Impact

- If unsure and no Technical Writer is available, please contact Content Strategy.

- What concepts do customers need to understand to be successful in [action]?

- How do we expect customers will use the feature? For what purpose(s)?

- What reference material might a customer want/need to complete [action]?

- Is there source material that can be used as reference for the Technical Writer in writing the content? If yes, please link if available.

- What is the doc impact (New Content, Updates to existing content, or Release Note)?

When OCP is performing a cluster upgrade, users should be notified of it.

There are two ways to surface the cluster upgrade to users:

- Display a console notification throughout OCP web UI saying that the cluster is currently under upgrade.

- Global notification throughout OCP web UI saying that the cluster is currently under upgrade.

- Have an alert firing for all the users of OCP stating the cluster is undergoing an upgrade.

AC:

- Console-operator will create a ConsoleNotification CR when the cluster is being upgraded. Once the upgrade is done, console-operator will remove that CR. These are the three statuses based on which we are determining if the cluster is being upgraded.

- Add unit tests
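A minimal sketch of the create/remove behaviour from the acceptance criteria follows. The `apiVersion`, `kind`, and the `text`, `location`, `color`, and `backgroundColor` fields belong to the existing ConsoleNotification API; the resource name, banner text, colours, and both function names are assumptions. How "the cluster is being upgraded" is detected is left as a boolean input, because the statuses used for that decision are not listed in this card.

```python
import json


def upgrade_notification(target_version: str) -> dict:
    """Build a ConsoleNotification that renders as a banner during an upgrade."""
    return {
        "apiVersion": "console.openshift.io/v1",
        "kind": "ConsoleNotification",
        "metadata": {"name": "cluster-upgrade"},  # hypothetical name
        "spec": {
            "text": f"This cluster is updating to {target_version}.",
            "location": "BannerTop",
            # Colours are placeholders; the card leaves the colour decision open.
            "color": "#fff",
            "backgroundColor": "#0088ce",
        },
    }


def reconcile(upgrading: bool, exists: bool) -> str:
    """Decide what the operator should do with the notification CR."""
    if upgrading and not exists:
        return "create"
    if not upgrading and exists:
        return "delete"
    return "noop"


print(json.dumps(upgrade_notification("4.x.y"), indent=2))
```

Keeping the decision in a pure function like `reconcile` makes the "Add unit tests" criterion straightforward to satisfy.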

Note: We need to decide whether to distinguish this particular notification with a different color. cc: Ali Mobrem

Created from: https://issues.redhat.com/browse/RFE-3024

During master node upgrades, when nodes are being drained, there is currently no protection against two or more operands going down at the same time. If your component is required to be available during upgrades or other voluntary disruptions, please consider deploying a PodDisruptionBudget (PDB) to protect your operands.

The effort is tracked in https://issues.redhat.com/browse/WRKLDS-293.

Example:

Acceptance Criteria:

1. Create PDB controller in console-operator for both console and downloads pods

2. Add e2e tests for PDB in single node and multi node cluster
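As an illustration of the first acceptance criterion, the sketch below builds one PodDisruptionBudget each for the console and downloads pods. `policy/v1` and the `maxUnavailable` and `selector` fields are standard Kubernetes; the label values and the choice of `maxUnavailable: 1` are assumptions. With two replicas this budget keeps one pod running during a drain, and with a single replica (single node cluster) it does not block the drain.

```python
import json


def console_pdb(name: str, component: str) -> dict:
    """Build a PDB allowing at most one pod of the component to be disrupted."""
    return {
        "apiVersion": "policy/v1",
        "kind": "PodDisruptionBudget",
        "metadata": {"name": name, "namespace": "openshift-console"},
        "spec": {
            "maxUnavailable": 1,
            # Label values are assumed; check the labels on the real deployments.
            "selector": {"matchLabels": {"app": "console", "component": component}},
        },
    }


pdbs = [console_pdb("console", "ui"), console_pdb("downloads", "downloads")]
print(json.dumps(pdbs, indent=2))
```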

Note: We should consider backporting this to 4.10.

The work on this story depends on the following changes:

- Enhancement doc - https://github.com/openshift/enhancements/pull/577

- API change - https://github.com/openshift/api/pull/852

- Ingress operator changes - https://github.com/openshift/cluster-ingress-operator/pull/552

The console already supports custom routes on the operator config. The newly proposed CustomDomains API introduces a unified way to configure custom domains for routes, covering both the names and the serving cert/keys that customers want to customise. From the console perspective those are:

- openshift-console / console

- openshift-console / downloads (CLI downloads)

The setup should be done on the Ingress config. Two new fields are introduced there:

- ComponentRouteSpec - contains the configuration for the custom domain (name, namespace, custom hostname, TLS secret reference)

- ComponentRouteStatus - contains the status of the custom domain (condition, previous hostname, RBAC needed to read the TLS secret, ...)

Console-operator will only consume the API and check for any changes. If a custom domain is set for either the `console` or `downloads` route in the `openshift-console` namespace, console-operator will read the setup and set a custom route accordingly. When a custom route is set up for any of the console's routes, the default route won't be deleted; instead, it will be updated so that it redirects to the custom one. This is done for two reasons:

- we want to prevent somebody from stealing the default hostname of both routes (console, downloads)

- we want to prevent users from having unusable bookmarks that are pointing to the default hostname
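The lookup and redirect behaviour described above can be sketched as follows. The `spec.componentRoutes` layout with `name`, `namespace`, and `hostname` follows the fields this story lists for ComponentRouteSpec, but the exact key names, both function names, and the shape of the returned plan are assumptions.

```python
from typing import Optional


def custom_hostname(
    ingress_config: dict, name: str, namespace: str = "openshift-console"
) -> Optional[str]:
    """Return the custom hostname configured for a component route, if any."""
    for route in ingress_config.get("spec", {}).get("componentRoutes", []):
        if route.get("name") == name and route.get("namespace") == namespace:
            return route.get("hostname")
    return None


def route_plan(ingress_config: dict, name: str, default_hostname: str) -> dict:
    """Keep the default route and redirect it when a custom hostname is set."""
    custom = custom_hostname(ingress_config, name)
    if custom is None or custom == default_hostname:
        return {"serve": default_hostname, "redirect": None}
    return {"serve": custom, "redirect": {"from": default_hostname, "to": custom}}


ingress = {
    "spec": {
        "componentRoutes": [
            {
                "name": "console",
                "namespace": "openshift-console",
                "hostname": "console.example.com",  # hypothetical custom domain
            }
        ]
    }
}
print(route_plan(ingress, "console", "console-openshift-console.apps.example.com"))
```

Because the default route is kept and redirected, nobody else can claim the default hostname and existing bookmarks continue to work.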

Console-operator will still need to support the CustomDomain API that is available on its config.

Acceptance criteria:

- Console supports the new CustomDomains API for configuring a custom domain for both `console` and `downloads` routes

- Console falls back to the deprecated API in the console operator config if present

- Console supports the original default domains and redirects to the new ones

Questions:

- Which CustomDomain API takes precedence: the Ingress config or the console-operator config? Can an upgrade cause any issues?

Bump the openshift/api godep to pick up the new CustomDomain API for the Ingress config.

Implement console-operator changes to consume new CustomDomains API, based on the story details.

Feature Overview

Quick Starts are a key tool for helping our customers discover and understand how to take advantage of services that run on top of the OpenShift Platform. This feature will focus on making the Quick Starts extensible. With this Console extension, our customers and partners will be able to add their own Quick Starts to help drive a great user experience.

Enhancement PR: https://github.com/openshift/enhancements/pull/360

Goals

- Provide a supported API that our internal teams, external partners and customers can use to create Quick Starts for the OCP Console (QuickStart CRD).

- Provide proper documentation and templates to make it as easy as possible to create Quick Starts

- Update the Console Operator to generate Quick Starts for enabling key services on the OCP Clusters:

- Serverless

- Pipelines

- Virtualization

- OCS

- ServiceMesh

- Process to validate Quick Starts for each release (Internal Teams Only)

- Support Internationalization

Requirements

| Requirement | Notes | isMvp? |
|---|---|---|
| Define QuickStart CRD | | YES |
| Console Operator: Out of the box support for installing Quick Starts for enabling Operators | | YES |
| Process (Design/Review), Documentation + Template for providing out of the box quick starts | | YES |
| Process, Docs, for enabling Operators to add Quick Starts | | YES |
| Migrate existing UI to work with CRD | | |
| Move Existing Quick Starts to new CRD | | YES |
| Support Internationalization | | NO |
| CI - MUST be running successfully with test automation | This is a requirement for ALL features. | YES |
| Release Technical Enablement | Provide necessary release enablement details and documents. | YES |

Documentation Considerations

Questions to be addressed:

- What educational or reference material (docs) is required to support this product feature? For users/admins? Other functions (security officers, etc)?

- Does this feature have doc impact?

- New Content, Updates to existing content, Release Note, or No Doc Impact

- If unsure and no Technical Writer is available, please contact Content Strategy.

- What concepts do customers need to understand to be successful in [action]?

- How do we expect customers will use the feature? For what purpose(s)?

- What reference material might a customer want/need to complete [action]?

- Is there source material that can be used as reference for the Technical Writer in writing the content? If yes, please link if available.

- What is the doc impact (New Content, Updates to existing content, or Release Note)?

Goal

Provide a dynamic extensible mechanism to add Guided Tours to the OCP Console.

- Format of Guided Tours (Almost like Interactive Documentation)

- Markup language

- Made up of Long Description, Steps, Links (Internal, External), Images

- Steps should be links to the page. The user navigates to the next page by clicking a button

- A tour may offer a link to a secondary tour

- A tour may offer an external link to documentation, etc

- Progress indicator, indicating the number of steps in the tour

- Perspective

- Tour information to be displayed on the Cards in the landing page

- Title

- Summary (aka Short Desc)

- Image

- Approx time

- Perspective

- Indication if already completed (local storage, maybe this is punted til User Pref)

User Stories/Scenarios

As a product owner, I need a mechanism to add guided tours that will help guide my users to enable my service.

As a product owner, I need a mechanism to add guided tours that will help guide my users to consume my service.

As an Operator, I need a mechanism to add guided tours that will help guide my users to consume my service.

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- ...

Dependencies (internal and external)

- ...

Previous Work (Optional):

- …

Open questions:

- …

Done Checklist

- CI - CI is running, tests are automated and merged.

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

We need a README about the submission process for adding quick starts to the console-operator repo.

As a user, I would like to see a YAML sample for the new QuickStart CRD when I go to create my own Quick Starts.
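For orientation, a sample of the kind requested here might look like the sketch below, written as a Python dict that serializes to JSON (which `oc apply -f -` accepts). The `ConsoleQuickStart` kind and its spec fields reflect the API as it eventually shipped in `console.openshift.io/v1`; all text values are placeholders, and the list of fields treated as required by `missing_fields` is an assumption of this sketch.

```python
import json

quick_start = {
    "apiVersion": "console.openshift.io/v1",
    "kind": "ConsoleQuickStart",
    "metadata": {"name": "example-quick-start"},
    "spec": {
        "displayName": "Example quick start",
        "durationMinutes": 10,
        "description": "Short summary shown on the catalog card.",
        "introduction": "Longer text shown before the first task.",
        "tasks": [
            {
                "title": "First task",
                "description": "Step-by-step instructions in markdown.",
                "review": {
                    "instructions": "How the user verifies the task.",
                    "failedTaskHelp": "What to try if verification fails.",
                },
                "summary": {"success": "Task done.", "failed": "Try again."},
            }
        ],
        "conclusion": "Text shown after the last task.",
    },
}

# Fields this sketch treats as required.
REQUIRED = ("displayName", "description", "durationMinutes", "introduction", "tasks")


def missing_fields(obj: dict) -> list:
    """List required spec fields that are absent or empty."""
    spec = obj.get("spec", {})
    return [field for field in REQUIRED if not spec.get(field)]


print(json.dumps(quick_start, indent=2))
```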

Epic Goal

- ...

Why is this important?

- …

Scenarios

- ...

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

- ...

Dependencies (internal and external)

- ...

Previous Work (Optional):

- …

Open questions:

- …

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

Epic Goal

- Promote vSphere control plane machinesets from tech preview to GA

Why is this important?

- …

Scenarios

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

- Promotion PRs collectively pass payload testing

Dependencies (internal and external)

- ...

Previous Work (Optional):

- …

Open questions:

- …

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

Feature Overview (aka. Goal Summary)

Enable the OCP Console to send back user analytics to our existing endpoints in console.redhat.com. Please refer to doc for details of what we want to capture in the future:

Collect desired telemetry of user actions within OpenShift console to improve knowledge of user behavior.

Goals (aka. expected user outcomes)

OpenShift console should be able to send telemetry to a pre-configured Red Hat proxy that can be forwarded to 3rd party services for analysis.

Requirements (aka. Acceptance Criteria):

User analytics should respect the existing telemetry mechanism used to disable data being sent back

Need to update existing documentation with what user data we track from the OCP Console: https://docs.openshift.com/container-platform/4.14/support/remote_health_monitoring/about-remote-health-monitoring.html

Capture and send desired user analytics from OpenShift console to Red Hat proxy

Red Hat proxy to forward telemetry events to appropriate Segment workspace and Amplitude destination

Use existing setting to opt out of sending telemetry: https://docs.openshift.com/container-platform/4.14/support/remote_health_monitoring/opting-out-of-remote-health-reporting.html#opting-out-remote-health-reporting

Also, allow disabling only user analytics without affecting the rest of telemetry: add an annotation to the Console to disable just user analytics.

Update docs to show this method as well.

We will require a mechanism to store all the segment values

We need to be able to pass back orgID that we receive from the OCM subscription API call

Use Cases (Optional):

Questions to Answer (Optional):

Out of Scope

Sending telemetry from OpenShift cluster nodes

Background

Console already has support for sending analytics to segment.io in Dev Sandbox and OSD environments. We should reuse this existing capability, but default to http://console.redhat.com/connections/api for analytics and http://console.redhat.com/connections/cdn to load the JavaScript in other environments. We must continue to allow Dev Sandbox and OSD clusters a way to configure their own segment key, whether telemetry is enabled, segment API host, and other options currently set as annotations on the console operator configuration resource.

Console will need a way to determine the org-id to send with telemetry events. Likely the console operator will need to read this from the cluster pull secret.

Customer Considerations

Documentation Considerations

Interoperability Considerations

Which other projects, including ROSA/OSD/ARO, and versions in our portfolio does this feature impact? What interoperability test scenarios should be factored by the layered products? Initial completion during Refinement status.

Problem:

The console telemetry plugin needs to send data to a new Red Hat ingress point that will then forward it to Segment for analysis.

Goal:

Update console telemetry plugin to send data to the appropriate ingress point.

Why is it important?

Use cases:

- <case>

Acceptance criteria:

- Update the URL to the Ingress point created for console.redhat.com

- Ensure telemetry data is flowing to the ingress point.

Dependencies (External/Internal):

Ingress point created for console.redhat.com

Design Artifacts:

Exploration:

Note:

We want to enable segment analytics by default on all (incl. self-managed) OCP clusters using a known segment API key and the console.redhat.com proxy. We still want to honor the segment-related annotations on the console operator config so that these values can be overridden.

Most likely the segment key should be defaulted in the console operator, otherwise we would need a separate console flag for disabling analytics. If the operator provides the key, then the console backend can use the presence of the key to determine when to enable analytics. We can likely change the segment URL and CDN default values directly in the console code, however.

ODC-7517 tracks disabling segment analytics when cluster telemetry is disabled, which is a separate change, but required for this work.

OpenShift UI Telemetry Implementation details

These three keys should have new default values:

- SEGMENT_API_KEY

- SEGMENT_API_HOST

- SEGMENT_JS_HOST OR SEGMENT_JS_URL

Defaults:

    stringData:
      SEGMENT_API_KEY: BnuS1RP39EmLQjP21ko67oDjhbl9zpNU
      SEGMENT_API_HOST: console.redhat.com/connections/api/v1
      SEGMENT_JS_HOST: console.redhat.com/connections/cdn

AC:

- Add default values for telemetry annotations into a CM in the openshift-console-operator NS.

- Add and update unit tests and add e2e tests
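The "defaults plus annotation overrides" behaviour can be sketched as a small merge function. The annotation prefix is taken from the `telemetry.console.openshift.io/DISABLED` annotation used elsewhere in these notes; applying the same prefix to the Segment keys is an assumption, as are the function name and the placeholder API key value.

```python
ANNOTATION_PREFIX = "telemetry.console.openshift.io/"

# Default values; the key is a placeholder, the hosts are the defaults listed above.
DEFAULTS = {
    "SEGMENT_API_KEY": "<default-segment-api-key>",
    "SEGMENT_API_HOST": "console.redhat.com/connections/api/v1",
    "SEGMENT_JS_HOST": "console.redhat.com/connections/cdn",
}


def effective_telemetry(annotations: dict) -> dict:
    """Start from the defaults and let telemetry annotations override them."""
    config = dict(DEFAULTS)
    for key, value in annotations.items():
        if key.startswith(ANNOTATION_PREFIX):
            config[key[len(ANNOTATION_PREFIX):]] = value
    return config


# Example: a Dev Sandbox style cluster overriding only the API host.
print(effective_telemetry({ANNOTATION_PREFIX + "SEGMENT_API_HOST": "api.example.com"}))
```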

Description

As an administrator, I want to disable all telemetry on my cluster including UI analytics sent to segment.

We should honor the existing telemetry configuration so that we send no analytics when an admin opts out of telemetry. See the documentation here:

Simon Pasquier

yes this is the official supported way to disable telemetry though we also have a hidden flag in the CMO configmap that CI clusters use to disable telemetry (it depends if you want to push analytics for CI clusters).

CMO configmap is set to

    data:
      config.yaml: |-
        telemeterClient:
          enabled: false

the CMO code that reads the cloud.openshift.com token:

https://github.com/openshift/cluster-monitoring-operator/blob/b7e3f50875f2bb1fed912b23fb80a101d3a786c0/pkg/manifests/config.go#L358-L386

Acceptance Criteria

- No segment events are sent when any of the following is true:

  - The cluster is a CI cluster, which means at least one of these two conditions is met:

    - The cluster pull secret does not have "cloud.openshift.com" credentials [1]

    - The cluster monitoring config has 'telemeterClient: {enabled: false}' [2]

  - The console operator config telemetry disabled annotation == true [3]

- Add and update unit tests, and also e2e tests
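The conditions in these acceptance criteria can be sketched as a single predicate. The inputs are assumed to be already parsed: the `auths` map from the pull secret, the parsed `config.yaml` from the cluster monitoring ConfigMap, and the annotations of the console operator config. The function names are hypothetical, and treating the annotation value case-insensitively is an assumption.

```python
DISABLED_ANNOTATION = "telemetry.console.openshift.io/DISABLED"


def is_ci_cluster(pull_secret_auths: dict, monitoring_config: dict) -> bool:
    """A cluster counts as CI if it lacks cloud.openshift.com creds
    or has the telemeter client explicitly disabled."""
    no_cloud_creds = "cloud.openshift.com" not in (pull_secret_auths or {})
    telemeter = (monitoring_config or {}).get("telemeterClient") or {}
    telemeter_disabled = telemeter.get("enabled") is False
    return no_cloud_creds or telemeter_disabled


def segment_events_allowed(
    pull_secret_auths: dict, monitoring_config: dict, console_annotations: dict
) -> bool:
    """Return True only when none of the opt-out conditions apply."""
    annotation = (console_annotations or {}).get(DISABLED_ANNOTATION, "")
    disabled_by_annotation = str(annotation).lower() == "true"
    ci = is_ci_cluster(pull_secret_auths, monitoring_config)
    return not (ci or disabled_by_annotation)


auths = {"cloud.openshift.com": {"auth": "<redacted>"}}
print(segment_events_allowed(auths, {}, {}))
```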

Additional Details:

Slack discussion https://redhat-internal.slack.com/archives/C0VMT03S5/p1707753976034809

    # [1] Check cluster pull secret for cloud.openshift.com creds
    oc get secret pull-secret -n openshift-config -o json | jq -r '.data.".dockerconfigjson"' | base64 -d | jq -r '.auths."cloud.openshift.com"'

    # [2] Check cluster monitoring operator config for 'telemeterClient.enabled == false'
    oc get configmap cluster-monitoring-config -n openshift-monitoring -o json | jq -r '.data."config.yaml"'

    # [3] Check console operator config telemetry disabled annotation
    oc get console.operator.openshift.io cluster -o json | jq -r '.metadata.annotations."telemetry.console.openshift.io/DISABLED"'

Epic Goal

- Console should receive “organization.external_id” from the OCM Subscription API call.

- We need to store the ORG ID locally and pass it back to Segment.io to enhance the user analytics we are tracking

API the Console uses:

const apiUrl = `/api/accounts_mgmt/v1/subscriptions?page=1&search=external_cluster_id%3D%27${clusterID}%27`;

Reference: Original Console PR

Why is this important?

High Level Feature Details can be found here

Scenarios

- ...

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

- ...

Dependencies (internal and external)

- ...

Previous Work (Optional):

- …

Open questions:

- …

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

Currently, we can get the organization ID from the OCM server by querying subscription and adding the fetchOrganization=true query parameter based on the comment.

We should be passing this ID as SERVER_FLAG.telemetry.ORGANIZATION_ID to the frontend, and as organizationId to Segment.io

Fetching should be done by the console-operator due to its RBAC permissions. Once the Organization_ID is retrieved, console operator should set it on the console-config.yaml, together with other telemetry variables.

AC:

- Update console-operator's main controller to check whether the telemeter client is available on the cluster, which signals that it is a customer/production cluster

- Consume the telemetry parameter ORG_ID and pass it as parameter to segment in the console
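The organization lookup described in this story can be sketched as two small helpers: one builds the subscription query, including the `fetchOrganization=true` parameter, and one pulls `organization.external_id` out of the response. The URL path and search expression come from the console code quoted earlier; the response shape (an `items` list whose entries carry an `organization` object) and both function names are assumptions.

```python
from typing import Optional
from urllib.parse import quote


def subscription_url(cluster_id: str) -> str:
    """Build the OCM subscription query for a cluster, asking for the organization."""
    search = quote(f"external_cluster_id='{cluster_id}'", safe="")
    return (
        "/api/accounts_mgmt/v1/subscriptions"
        f"?page=1&search={search}&fetchOrganization=true"
    )


def organization_id(response: dict) -> Optional[str]:
    """Extract organization.external_id from the first subscription, if present."""
    items = response.get("items") or []
    if not items:
        return None
    return (items[0].get("organization") or {}).get("external_id")


print(subscription_url("abc-123"))
print(organization_id({"items": [{"organization": {"external_id": "1234567"}}]}))
```

The value returned by `organization_id` is what the operator would write into the console config as the telemetry ORGANIZATION_ID.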

Feature Overview (aka. Goal Summary)

Volume Group Snapshots is a key new Kubernetes storage feature that allows multiple PVs to be grouped together and snapshotted at the same time. This enables customers to take consistent snapshots of applications that span multiple PVs.

This is also a key requirement for backup and DR solutions.

https://kubernetes.io/blog/2023/05/08/kubernetes-1-27-volume-group-snapshot-alpha/

https://github.com/kubernetes/enhancements/tree/master/keps/sig-storage/3476-volume-group-snapshot
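As a rough illustration of what such a grouped snapshot request can look like, the manifest below is a minimal sketch based on the upstream alpha API described in the Kubernetes 1.27 blog post linked above. The object name, namespace, class name, and label are hypothetical placeholders, and the API version may differ depending on the level to which the feature has graduated in a given release.

```yaml
# Sketch only: assumes the upstream alpha API group/version from Kubernetes 1.27.
apiVersion: groupsnapshot.storage.k8s.io/v1alpha1
kind: VolumeGroupSnapshot
metadata:
  name: app-group-snapshot                 # hypothetical name
  namespace: demo                          # hypothetical namespace
spec:
  # Hypothetical class; it must reference a CSI driver that supports group snapshots.
  volumeGroupSnapshotClassName: example-group-snapclass
  source:
    selector:
      matchLabels:
        app: my-database                   # hypothetical label carried by every PVC in the group
```

All PVCs in the namespace that carry the selected label are snapshotted together, which is what makes the resulting snapshots consistent across volumes.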

Goals (aka. expected user outcomes)

Productise the volume group snapshots feature as Tech Preview, with docs, testing, and a feature gate to enable it, so that customers and partners can test it in advance.

Requirements (aka. Acceptance Criteria):

The feature should graduate to beta upstream to become TP in OCP. Tests and CI must pass, and a feature gate should allow customers and partners to easily enable it. We should identify all OCP-shipped CSI drivers that support this feature and configure them accordingly.

Use Cases (Optional):

- As a storage vendor I want to have early access to the VolumeGroupSnapshot feature to test and validate my driver support.

- As a backup vendor I want to have early access to the VolumeGroupSnapshot feature to test and validate my backup solution.

- As a customer I want early access to test the VolumeGroupSnapshot feature in order to take consistent snapshots of my workloads that are relying on multiple PVs.

Out of Scope

CSI drivers development/support of this feature.

Background

Provide any additional context is needed to frame the feature. Initial completion during Refinement status.

Customer Considerations

Drivers must support this feature and enable it. Partners may need to change their operator and/or doc to support it.

Documentation Considerations

Document how to enable the feature, what this feature does and how to use it. Update the OCP driver's table to include this capability.

Interoperability Considerations

Can be leveraged by ODF and OCP virt, especially around backup and DR scenarios.

Epic Goal*

Create an OCP feature gate that allows customers and partners to use the VolumeGroupSnapshot feature while the feature is in alpha and beta upstream.

Why is this important? (mandatory)

Volume group snapshot is an important feature for ODF, OCP virt and backup partners. It requires driver support so partners need early access to the feature to confirm their driver works as expected before GA. The same applies to backup partners.

Scenarios (mandatory)

Provide details for user scenarios including actions to be performed, platform specifications, and user personas.

- As a storage vendor I want to have early access to the VolumeGroupSnapshot feature to test and validate my driver support.

- As a backup vendor I want to have early access to the VolumeGroupSnapshot feature to test and validate my backup solution.

Dependencies (internal and external) (mandatory)

This depends on driver support: the feature gate will enable the feature only in the drivers that support it (OCP-shipped drivers).

The feature gate should

- Configure the snapshotter to start with the right parameter to enable VolumeGroupSnapshot

- Create the necessary CRDs

- Configure the OCP shipped CSI driver
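For orientation, feature gates in OpenShift are switched on through the cluster-scoped FeatureGate resource. The sketch below shows the general shape of enabling the Tech Preview feature set; whether VolumeGroupSnapshot is part of that set in a given release is an assumption here, not something the text above confirms.

```yaml
# Sketch only: enables the Tech Preview feature set cluster-wide.
# Assumption: the VolumeGroupSnapshot gate is included in this feature set.
apiVersion: config.openshift.io/v1
kind: FeatureGate
metadata:
  name: cluster            # the singleton cluster-wide FeatureGate object
spec:
  featureSet: TechPreviewNoUpgrade
```

Note that turning on TechPreviewNoUpgrade cannot be undone and prevents the cluster from being upgraded, so it is suited to test clusters only.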

Contributing Teams(and contacts) (mandatory)

Our expectation is that teams would modify the list below to fit the epic. Some epics may not need all the default groups but what is included here should accurately reflect who will be involved in delivering the epic.

- Development - STOR

- Documentation - N/A

- QE - STOR

- PX -

- Others -

Acceptance Criteria (optional)

By enabling the feature gate, partners should be able to use the VolumeGroupSnapshot API. Drivers not shipped with OCP may need to be configured.

Drawbacks or Risk (optional)

Reasons we should consider NOT doing this such as: limited audience for the feature, feature will be superseded by other work that is planned, resulting feature will introduce substantial administrative complexity or user confusion, etc.

Done - Checklist (mandatory)

The following points apply to all epics and are what the OpenShift team believes are the minimum set of criteria that epics should meet for us to consider them potentially shippable. We request that epic owners modify this list to reflect the work to be completed in order to produce something that is potentially shippable.

- CI Testing - Basic e2e automation tests are merged and completing successfully

- Documentation - Content development is complete.

- QE - Test scenarios are written and executed successfully.

- Technical Enablement - Slides are complete (if requested by PLM)

- Engineering Stories Merged

- All associated work items with the Epic are closed

- Epic status should be “Release Pending”

Feature Overview

Console enhancements based on customer RFEs that improve customer user experience.

Goals

- This Section: Provide high-level goal statement, providing user context and expected user outcome(s) for this feature

- …

Requirements

- This Section: A list of specific needs or objectives that a Feature must deliver to satisfy the Feature. Some requirements will be flagged as MVP. If an MVP gets shifted, the feature shifts. If a non-MVP requirement slips, it does not shift the feature.

| Requirement | Notes | isMvp? |
|---|---|---|
| CI - MUST be running successfully with test automation | This is a requirement for ALL features. | YES |
| Release Technical Enablement | Provide necessary release enablement details and documents. | YES |

(Optional) Use Cases

This Section:

- Main success scenarios - high-level user stories

- Alternate flow/scenarios - high-level user stories

- ...

Questions to answer…

- ...

Out of Scope

- …

Background, and strategic fit

This Section: What does the person writing code, testing, documenting need to know? What context can be provided to frame this feature.

Assumptions

- ...

Customer Considerations

- ...

Documentation Considerations

Questions to be addressed:

- What educational or reference material (docs) is required to support this product feature? For users/admins? Other functions (security officers, etc)?

- Does this feature have doc impact?

- New Content, Updates to existing content, Release Note, or No Doc Impact

- If unsure and no Technical Writer is available, please contact Content Strategy.

- What concepts do customers need to understand to be successful in [action]?

- How do we expect customers will use the feature? For what purpose(s)?

- What reference material might a customer want/need to complete [action]?

- Is there source material that can be used as reference for the Technical Writer in writing the content? If yes, please link if available.

- What is the doc impact (New Content, Updates to existing content, or Release Note)?

Based on https://issues.redhat.com/browse/RFE-3775 we should be extending our proxy package timeout to match the browser's timeout, which is 5 minutes.

AC: Bump the 30-second timeout in the proxy package to 5 minutes

Executive Summary

Image and artifact signing is a key part of a DevSecOps model. The Red Hat-sponsored sigstore project aims to simplify signing of cloud-native artifacts and sees increasing interest and uptake in the Kubernetes community. This document proposes to incrementally invest in OpenShift support for sigstore-style signed images and be public about it. The goal is to give customers a practical and scalable way to establish content trust. It will strengthen OpenShift’s security philosophy and value-add in the light of the recent supply chain security crisis.

CRI-O

- Support customer image validation

- Support OpenShift release image validation

https://docs.google.com/document/d/12ttMgYdM6A7-IAPTza59-y2ryVG-UUHt-LYvLw4Xmq8/edit#

Feature Overview (aka. Goal Summary)

This feature will track upstream work from the OpenShift Control Plane teams - API, Auth, etcd, Workloads, and Storage.

Goals (aka. expected user outcomes)

To continue and develop meaningful contributions to the upstream community including feature delivery, bug fixes, and leadership contributions.

Note: The matchLabelKeys field is a beta-level field and enabled by default in 1.27. You can disable it by disabling the MatchLabelKeysInPodTopologySpread [feature gate](https://kubernetes.io/docs/reference/command-line-tools-reference/feature-gates/).

Remove the feature from the TP set, as it is enabled by default.

This is cleanup work only.
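To make the note about matchLabelKeys concrete, here is a minimal sketch of a Deployment that uses the field in a topology spread constraint. The names, image, and replica count are hypothetical; only the field names come from the upstream Kubernetes API.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web                                   # hypothetical name
spec:
  replicas: 4
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      topologySpreadConstraints:
        - maxSkew: 1
          topologyKey: kubernetes.io/hostname
          whenUnsatisfiable: DoNotSchedule
          labelSelector:
            matchLabels:
              app: web
          # Spread is calculated per ReplicaSet revision: the value of this
          # label is read from the incoming pod and added to the selector.
          matchLabelKeys:
            - pod-template-hash
      containers:
        - name: web
          image: registry.example.com/web:latest   # hypothetical image
```

With pod-template-hash as the key, pods from an old revision are ignored when spreading pods from a new one during a rolling update.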

Upstream K8s deprecated PodSecurityPolicy and replaced it with a new built-in admission controller that enforces the Pod Security Standards (see here for the motivations for deprecation). There is an OpenShift-specific dedicated pod admission system called Security Context Constraints. Our aim is to keep the Security Context Constraints pod admission system while also allowing users to have access to the Kubernetes Pod Security Admission.

With OpenShift 4.11, we turned on Pod Security Admission with global "privileged" enforcement. Additionally, we set the "restricted" profile for warnings and audit. This configuration made it possible for users to opt in their namespaces to Pod Security Admission with the per-namespace labels. We also introduced a new mechanism that automatically synchronizes the Pod Security Admission "warn" and "audit" labels.

With OpenShift 4.15, we intend to move the global configuration to enforce the "restricted" pod security profile globally. With this change, the label synchronization mechanism will also switch into a mode where it synchronizes the "enforce" Pod Security Admission label rather than the "audit" and "warn".
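The per-namespace labels mentioned above can be sketched as follows. This is an illustrative Namespace only: the namespace name is hypothetical, and opting out of label synchronization is shown as one possible choice for a namespace whose owner wants to manage the labels by hand.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: my-app                                           # hypothetical namespace
  labels:
    # Upstream Pod Security Admission labels, one per mode.
    pod-security.kubernetes.io/enforce: restricted
    pod-security.kubernetes.io/audit: restricted
    pod-security.kubernetes.io/warn: restricted
    # OpenShift label that switches automatic label synchronization
    # off for this namespace, so the values above are not overwritten.
    security.openshift.io/scc.podSecurityLabelSync: "false"
```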

Epic Goal

Get Pod Security admission to run in "restricted" mode globally by default, alongside SCC admission.

When creating a custom SCC, it is possible to assign a priority that is higher than existing SCCs. This means that any SA with access to all SCCs might use the higher priority custom SCC, and this might mutate a workload in an unexpected/unintended way.

To protect platform workloads from such an effect (which, combined with PSa, might result in rejecting the workload once we start enforcing the "restricted" profile), we must pin the required SCC to all workloads in platform namespaces (openshift-*, kube-*, default).

Each workload should pin the SCC with the least-privilege, except workloads in runlevel 0 namespaces that should pin the "privileged" SCC (SCC admission is not enabled on these namespaces, but we should pin an SCC for tracking purposes).
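Pinning is done with an annotation on the pod template. The manifest below is a minimal sketch of that pattern, assuming the openshift.io/required-scc annotation; the workload name, namespace, image, and the choice of restricted-v2 as the least-privileged SCC are hypothetical.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: example-operator                    # hypothetical platform workload
  namespace: openshift-example              # hypothetical platform namespace
spec:
  selector:
    matchLabels:
      app: example-operator
  template:
    metadata:
      labels:
        app: example-operator
      annotations:
        # Pins SCC admission to exactly this SCC, so a higher-priority
        # custom SCC cannot be selected and mutate the pod.
        openshift.io/required-scc: restricted-v2
    spec:
      containers:
        - name: operator
          image: registry.example.com/example-operator:latest   # hypothetical image
```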

The following table tracks progress:

| namespace | in review | merged |
|---|---|---|
| openshift-apiserver-operator | | |
| openshift-authentication | | |
| openshift-authentication-operator | | |
| openshift-catalogd | | |
| openshift-cloud-controller-manager | | |
| openshift-cloud-controller-manager-operator | | |
| openshift-cloud-credential-operator | | |
| openshift-cloud-network-config-controller | | |
| openshift-cluster-csi-drivers | | |
| openshift-cluster-machine-approver | | |
| openshift-cluster-node-tuning-operator | | |
| openshift-cluster-olm-operator | | |
| openshift-cluster-samples-operator | | |
| openshift-cluster-storage-operator | | |
| openshift-cluster-version | | |
| openshift-config-operator | | |
| openshift-console | | |
| openshift-console-operator | | |
| openshift-controller-manager | | |
| openshift-controller-manager-operator | | |
| openshift-dns | | |
| openshift-dns-operator | | |
| openshift-etcd | | |
| openshift-etcd-operator | | |
| openshift-image-registry | | |
| openshift-ingress | | |
| openshift-ingress-canary | | |
| openshift-ingress-operator | | |
| openshift-insights | | |
| openshift-kube-apiserver | | |
| openshift-kube-apiserver-operator | | |
| openshift-kube-controller-manager | | |
| openshift-kube-controller-manager-operator | | |
| openshift-kube-scheduler | | |
| openshift-kube-scheduler-operator | | |
| openshift-kube-storage-version-migrator | | |
| openshift-kube-storage-version-migrator-operator | | |
| openshift-machine-api | | |
| openshift-machine-config-operator | | |
| openshift-marketplace | PR | |
| openshift-monitoring | | |
| openshift-multus | | |
| openshift-network-diagnostics | | |
| openshift-network-node-identity | | |
| openshift-network-operator | | |
| openshift-oauth-apiserver | | |
| openshift-operator-controller | | |
| openshift-operator-lifecycle-manager | | |
| openshift-ovn-kubernetes | | |
| openshift-route-controller-manager | | |
| openshift-service-ca | | |
| openshift-service-ca-operator | | |
| openshift-user-workload-monitoring | | |

Feature Overview (aka. Goal Summary)

As an OpenShift admin who wants to make my OCP cluster more secure and stable, I want to prevent anyone from scheduling their workloads on master nodes, so that master nodes run only OCP management-related workloads.

Goals (aka. expected user outcomes)

Secure OCP master nodes by preventing customer workloads from being scheduled on them.

Anyone applying toleration(s) in a pod spec can unintentionally tolerate master taints, which protect master nodes from receiving application workloads when master nodes are configured to repel them. An admission plugin needs to be configured to protect master nodes from this scenario. Besides taints and tolerations, users can also set spec.nodeName directly, which this plugin should also consider protecting master nodes against.
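The two ways of landing on a master node described above can be sketched with the following pods. All names, the node name, and the images are hypothetical; the fields themselves are standard Kubernetes pod spec fields.

```yaml
# Pod 1: a blanket toleration. An empty key with operator "Exists"
# tolerates every taint, including the control-plane (master) taints.
apiVersion: v1
kind: Pod
metadata:
  name: tolerate-everything                 # hypothetical name
spec:
  tolerations:
    - operator: Exists
  containers:
    - name: app
      image: registry.example.com/app:latest   # hypothetical image
---
# Pod 2: setting spec.nodeName bypasses the scheduler, so NoSchedule
# taints on the target node are never evaluated for this pod.
apiVersion: v1
kind: Pod
metadata:
  name: pinned-to-master                    # hypothetical name
spec:
  nodeName: master-0                        # hypothetical node name
  containers:
    - name: app
      image: registry.example.com/app:latest   # hypothetical image
```

An admission plugin that only inspects tolerations would miss the second pod, which is why the text calls out spec.nodeName separately.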

Needed so we can provide this workflow to customers following the proposal at https://github.com/openshift/enhancements/pull/1583

Reference https://issues.redhat.com/browse/WRKLDS-1015

kube-controller-manager pods are created by code residing in controllers provided by the kube-controller-manager operator, so changes are required in that repo to add a toleration for the node-role.kubernetes.io/control-plane:NoExecute taint.
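As a pod spec fragment, a toleration for that taint would look roughly like the sketch below. It only illustrates the standard toleration fields; where exactly the operator's pod templates need it is not covered by the text above.

```yaml
# Fragment of a pod spec: tolerate the control-plane NoExecute taint so the
# pod is not evicted from control-plane nodes that carry it.
tolerations:
  - key: node-role.kubernetes.io/control-plane
    operator: Exists
    effect: NoExecute
```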

Feature Overview

Console enhancements based on customer RFEs that improve customer user experience.


Based on this old feature request

https://issues.redhat.com/browse/RFE-1530

we do have impersonation in place for gaining access to other users' permissions via the console. However, the only documentation we currently have covers how to impersonate system:admin via the CLI; see

https://docs.openshift.com/container-platform/4.14/authentication/impersonating-system-admin.html

Please provide documentation for the console feature and the required prerequisites for the users/groups accordingly.

AC:

- Create a ConsoleQuickStart CR that helps users with impersonate access understand the impersonation workflow and functionality

- This quickstart should be available only to users with impersonate access

- Created CR should be placed in the console-operator's repo, where the default quickstarts are placed

More info on the impersonate access role - https://github.com/openshift/console/pull/13345/files
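A quick start that is shown only to users who can impersonate could be sketched as below. This is not the CR that was merged: the name, texts, and task are hypothetical, and gating visibility through accessReviewResources on the impersonate verb for users is an assumption about how the requirement could be met.

```yaml
apiVersion: console.openshift.io/v1
kind: ConsoleQuickStart
metadata:
  name: user-impersonation                  # hypothetical name
spec:
  displayName: Impersonating a user
  durationMinutes: 5
  description: Learn how to view the console with another user's permissions.
  introduction: This quick start walks through the impersonation workflow in the console.
  # Assumption: the quick start is listed only if the current user passes
  # this access review, that is, if they are allowed to impersonate users.
  accessReviewResources:
    - group: ""
      resource: users
      verb: impersonate
  tasks:
    - title: Start impersonating a user       # hypothetical task
      description: Open the user's details page and select the impersonate action.
```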

Feature Overview (aka. Goal Summary)

When the internal oauth-server and oauth-apiserver are removed and replaced with an external OIDC issuer (like Azure AD), the console must work for human users of the external OIDC issuer.

Goals (aka. expected user outcomes)

An end user can use the OpenShift console without a notable difference in experience. This must eventually work on both HyperShift and standalone, but HyperShift is the first priority if it impacts delivery.

Requirements (aka. Acceptance Criteria):

- User can log in and use the console

- User can get a kubeconfig that functions on the CLI with matching oc

- Both of those work on HyperShift

- Both of those work on standalone

Use Cases (Optional):

Include use case diagrams, main success scenarios, alternative flow scenarios. Initial completion during Refinement status.

Questions to Answer (Optional):

Include a list of refinement / architectural questions that may need to be answered before coding can begin. Initial completion during Refinement status.

Out of Scope

High-level list of items that are out of scope. Initial completion during Refinement status.

Background

Provide any additional context is needed to frame the feature. Initial completion during Refinement status.

Customer Considerations

Provide any additional customer-specific considerations that must be made when designing and delivering the Feature. Initial completion during Refinement status.

Documentation Considerations

Provide information that needs to be considered and planned so that documentation will meet customer needs. If the feature extends existing functionality, provide a link to its current documentation. Initial completion during Refinement status.

Interoperability Considerations

Which other projects, including ROSA/OSD/ARO, and versions in our portfolio does this feature impact? What interoperability test scenarios should be factored by the layered products? Initial completion during Refinement status.

OCP/Telco Definition of Done

Epic Template descriptions and documentation.

<--- Cut-n-Paste the entire contents of this description into your new Epic --->

Epic Goal

- When the oauthclient API is not present, the operator must stop creating the oauthclient

Why is this important?

- This is preventing the operator from creating its deployment

Scenarios

- ...

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

- ...

Dependencies (internal and external)

- ...

Previous Work (Optional):

- …

Open questions:

- …

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

Console needs to be able to authenticate against an external OIDC IdP. For that, console-operator needs to configure it accordingly.

AC:

- bump console-operator API pkg

- sync oauth client secret from OIDC configuration for auth type OIDC

- add OIDC config to the console configmap

- add auth server CA to the deployment annotations and volumes when auth type OIDC

- consume OIDC configuration in the console configmap and deployment

- fix roles for oauthclients and authentications watching

OCP/Telco Definition of Done

Epic Template descriptions and documentation.


Epic Goal

- When installed with external OIDC, the clientID and clientSecret need to be configurable to match the external (and unmanaged) OIDC server

Why is this important?

- Without a configurable clientID and secret, I don't think the console can identify the user.

- There must be a mechanism to do this on both hypershift and openshift, though the API may be very similar.
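On standalone OpenShift, one way such a configuration can be expressed is through the cluster Authentication resource. The sketch below assumes the Tech Preview OIDC fields of that API (oidcProviders and oidcClients); the provider name, issuer URL, client ID, and secret name are hypothetical, and the HyperShift equivalent lives on a different resource.

```yaml
# Sketch only: assumes the Tech Preview external OIDC fields of the
# config.openshift.io/v1 Authentication API.
apiVersion: config.openshift.io/v1
kind: Authentication
metadata:
  name: cluster
spec:
  type: OIDC
  oidcProviders:
    - name: external-idp                        # hypothetical provider name
      issuer:
        issuerURL: https://idp.example.com/tenant   # hypothetical issuer
        audiences:
          - console-client-id                   # hypothetical audience
      oidcClients:
        - componentName: console
          componentNamespace: openshift-console
          clientID: console-client-id           # hypothetical client ID registered at the IdP
          clientSecret:
            name: console-oidc-secret           # hypothetical Secret holding the client secret
```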

Scenarios

- ...

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

- ...

Dependencies (internal and external)

- ...

Previous Work (Optional):

- …

Open questions:

- …

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

OCP/Telco Definition of Done

Epic Template descriptions and documentation.


Epic Goal

- The goal of this epic is to capture all the work and effort required to update the OpenShift control plane to upstream Kubernetes v1.29

Why is this important?

- A rebase is a mandatory process for every OCP release, in order to leverage all the new features implemented upstream

Scenarios

- ...

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

- ...

Dependencies (internal and external)

- ...

Previous Work (Optional):

- The following epic captured the previous rebase work for k8s v1.28: https://issues.redhat.com/browse/STOR-1425

Open questions:

- …

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

Bump the following libraries, in order, to the latest kube and the dependent libraries

- openshift/api

- openshift/client-go

- openshift/library-go

- openshift/apiserver-library-go

- kube-openapi (if required)

Prev Ref:

https://github.com/openshift/api/pull/1534

https://github.com/openshift/client-go/pull/250

https://github.com/openshift/library-go/pull/1557

https://github.com/openshift/apiserver-library-go/pull/118

Problem statement

DPDK applications require dedicated CPUs that are isolated from any preemption (other processes, kernel threads, interrupts). This can be achieved with the “static” policy of the CPU Manager: the container resources need to include an integer number of CPUs, of equal value in “limits” and “requests”. For instance, to get six exclusive CPUs:

    spec:
      containers:
      - name: CNF
        image: myCNF
        resources:
          limits:
            cpu: "6"
          requests:
            cpu: "6"

The six CPUs are dedicated to that container. However, non-trivial (that is, real) DPDK applications do not use all of those CPUs, because at least one CPU always runs a slow path, processing configuration and printing logs (among the DPDK coding rules: no syscall in PMD threads, or you are in trouble). Even the DPDK PMD drivers and core libraries include pthreads that are intended to sleep; they are infrastructure pthreads that process link-change interrupts, for instance.

Can we envision going with two processes, one with the isolated cores and one with the slow-path ones, so that we can have two containers? Unfortunately not: a multi-process design, where only dedicated pthreads would run in one process, is not an option, because DPDK multi-process is being deprecated upstream and was never widely adopted, as it never worked properly. Fixing it and changing the DPDK architecture to systematically use two processes is absolutely not possible within a year, and would require all DPDK applications to be rewritten. Given that the first and current multi-process implementation is a failure, nothing guarantees that a second one would succeed.

The slow-path CPUs consume only a fraction of a real CPU and can safely run on the “shared” CPU pool of the CPU Manager. However, container specifications do not allow requesting two kinds of CPUs, for instance:

    spec:
      containers:
      - name: CNF
        image: myCNF
        resources:
          limits:
            cpu_dedicated: "4"
            cpu_shared: "20m"
          requests:
            cpu_dedicated: "4"
            cpu_shared: "20m"

Why do we care about allocating one extra CPU per container?

- Allocating one extra CPU means allocating an additional physical core, as the CPUs running a DPDK application should run on a dedicated physical core in order to get maximum and deterministic performance, because caches and CPU units are shared between the two hyperthreads.

- CNFs are built with a minimum of CPUs per container. Today this is still between 10 and 20, sometimes more, but the intent is to decrease the number of CPUs and increase the number of containers, as this is the “cloud native” way, rather than wasting resources by having containers that are too large to schedule, as in the VNF days (tetris effect)

Let’s take a realistic example, based on a real RAN CNF: running 6 containers with dedicated CPUs on a worker node, with a slow path requiring 0.1 CPUs, means that we waste 5 CPUs, meaning 3 physical cores. With real-life numbers:

- For a single datacenter composed of 100 nodes, we waste 300 physical cores

- For a single datacenter composed of 500 nodes, we waste 1500 physical cores

- For single-node OpenShift deployed on 1 million nodes, we waste 3 million physical cores
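The arithmetic behind these figures can be spelled out as follows. This is a sketch under two assumptions that the text implies but does not state explicitly: each container reserves one full dedicated CPU for a slow path that needs only 0.1 CPU, and each physical core exposes two hyperthreads. The symbols $W_{\text{CPU}}$, $W_{\text{core}}$, and $N$ (number of nodes) are introduced here only for illustration.

```latex
\begin{align*}
W_{\text{CPU}}  &= 6 \times (1 - 0.1) = 5.4 \approx 5 \text{ CPUs wasted per node} \\
W_{\text{core}} &= \lceil 5 / 2 \rceil = 3 \text{ physical cores wasted per node} \\
N = 100:        &\quad 100 \times 3 = 300 \text{ physical cores} \\
N = 500:        &\quad 500 \times 3 = 1500 \text{ physical cores} \\
N = 10^{6}:     &\quad 10^{6} \times 3 = 3 \times 10^{6} \text{ physical cores}
\end{align*}
```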

Intel public CPU price per core is around 150 US$, not even taking into account the ecological aspect of the waste of (rare) materials and the electricity and cooling…

Goals

- Implement an equivalent of Nokia CPU pooler, meaning a way to allocate dedicated and shared CPUs to a given container, and provide a way within the container to know which CPUs belong to which pool, so the CNF running in the container can properly pin its pthreads on the available CPUs.

Requirements

- This Section: A list of specific needs or objectives that a Feature must deliver to satisfy the Feature. Some requirements will be flagged as MVP. If an MVP gets shifted, the feature shifts. If a non-MVP requirement slips, it does not shift the feature.

| Requirement | Notes | isMvp? |
|---|---|---|
| CI - MUST be running successfully with test automation | This is a requirement for ALL features. | YES |
| Release Technical Enablement | Provide necessary release enablement details and documents. | YES |

Questions to answer…

- Would an implementation based on annotations be possible rather than an implementation requiring a container (so pod) definition change, like the CPU pooler does?

Out of Scope

- …

Background, and strategic fit

This issue has been addressed lately by OpenStack.

Assumptions

- ...

Customer Considerations

- ...

Documentation Considerations

- The feature needs documentation on how to configure OCP, create pods, and troubleshoot

Epic Goal

- An NRI plugin that is invoked by CRI-O right before container creation and updates the container's cpuset and quota to match the mixed-cpus request.

- The cpu pinning reconciliation operation must also execute the NRI API call on every update (so we can intercept kubelet and it does not destroy our changes)

- Dev Preview for 4.15

Why is this important?

- This would unblock lots of options, including mixed-CPU workloads where some CPUs could be shared among containers / pods (CNF-3706)

- This would also allow further research on dynamic (simulated) hyper-threading (CNF-3743)

Scenarios

- ...

Acceptance Criteria

- Have an NRI plugin which is called by the runtime and updates the container with mutual CPUs.

- The plugin must be able to override the CPU manager reconciliation loop and be immune to future CPU manager changes.

- The plugin must be robust and handle node reboot/kubelet/crio restart scenarios

- upstream CI - MUST be running successfully with tests automated.

- Release Technical Enablement - Provide necessary release enablement details and documents.

- OCP adoption in relevant OCP version

- NTO shall be able to deploy the new plugin

Dependencies (internal and external)

- ...

Previous Work (Optional):

- https://issues.redhat.com/browse/CNF-3706 : Spike - mix of shared and pinned/dedicated cpus within a container

- https://issues.redhat.com/browse/CNF-3743 : Spike: Dynamic offlining of cpu siblings to simulate no-smt

- upstream Node Resource Interface project - https://github.com/containerd/nri

- https://issues.redhat.com/browse/CNF-6082: [SPIKE] Cpus assigned hook point in CRI-O

- https://issues.redhat.com/browse/CNF-7603

Open questions:

N/A

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

Bump cluster-config-operator to pull in the mixed-cpus feature-gate API

Feature Overview

Telecommunications providers continue to deploy OpenShift at the Far Edge. The acceleration of this adoption and the nature of existing Telecommunication infrastructure and processes drive the need to improve OpenShift provisioning speed at the Far Edge site and the simplicity of preparation and deployment of Far Edge clusters, at scale.

Goals

- Simplicity The folks preparing and installing OpenShift clusters (typically SNO) at the Far Edge range in technical expertise from technician to barista. The preparation and installation phases need to be reduced to a human-readable script that can be utilized by a variety of non-technical operators. There should be as few steps as possible in both the preparation and installation phases.

- Minimize Deployment Time A telecommunications provider technician or brick-and-mortar employee who is installing an OpenShift cluster, at the Far Edge site, needs to be able to do it quickly. The technician has to wait for the node to become in-service (CaaS and CNF provisioned and running) before they can move on to installing another cluster at a different site. The brick-and-mortar employee has other job functions to fulfill and can't stare at the server for 2 hours. The install time at the far edge site should be in the order of minutes, ideally less than 20m.

- Utilize Telco Facilities Telecommunication providers have existing Service Depots where they currently prepare SW/HW prior to shipping servers to Far Edge sites. They have asked RH to provide a simple method to pre-install OCP onto servers in these facilities. They want to do parallelized batch installation to a set of servers so that they can put these servers into a pool from which any server can be shipped to any site. They also would like to validate and update servers in these pre-installed server pools, as needed.

- Validation before Shipment: Telecommunications providers incur a large cost if forced to manage software failures at the Far Edge, due to the scale and physically dispersed nature of the use case. They want to be able to validate the OCP and CNF software as a last-minute sanity check before shipping the platform to the Far Edge site.

- IPSec Support at Cluster Boot: Some Far Edge deployments occur on an insecure network, and for that reason access to the host’s BMC is not allowed. Additionally, an IPSec tunnel must be established before any traffic leaves the cluster once it's at the Far Edge site. It is not possible to enable IPSec on the BMC NIC, and therefore even after OpenShift has booted the BMC is still not accessible.

Requirements

- Factory Depot: Install OCP with minimal steps

- Telecommunications providers don't want an installation experience; they want to just pick a version and hit enter to install

- Configuration w/ DU Profile (PTP, SR-IOV, see telco engineering for details) as well as customer-specific addons (Ignition Overrides, MachineConfig, and other operators: ODF, FEC SR-IOV, for example)

- The installation cannot increase the in-service OCP compute budget (don't install anything other than what is needed for DU)

- Provide ability to validate previously installed OCP nodes

- Provide ability to update previously installed OCP nodes

- 100 parallel installations at Service Depot

- Far Edge: Deploy OCP with minimal steps

- Provide site-specific information via USB/file mount or simple interface

- Minimize time spent at far edge site by technician/barista/installer

- Register with desired RHACM Hub cluster for ongoing LCM

- Minimal ongoing maintenance of solution

- Some, but not all, telco operators do not want to install and maintain an OCP / ACM cluster at the Service Depot

- The current IPSec solution requires a libreswan container to run on the host so that all N/S OCP traffic is encrypted. With the current IPSec solution this feature would need to support provisioning host-based containers.

A list of specific needs or objectives that a Feature must deliver to satisfy the Feature. Some requirements will be flagged as MVP. If an MVP gets shifted, the feature shifts. If a non MVP requirement slips, it does not shift the feature.

| requirement | Notes | isMvp? |

Describe Use Cases (if needed)

Telecommunications Service Provider Technicians will be rolling out OCP w/ a vDU configuration to new Far Edge sites, at scale. They will be working from a service depot where they will pre-install/pre-image a set of Far Edge servers to be deployed at a later date. When ready for deployment, a technician will take one of these generic-OCP servers to a Far Edge site, enter the site specific information, wait for confirmation that the vDU is in-service/online, and then move on to deploy another server to a different Far Edge site.

Retail employees in brick-and-mortar stores will install SNO servers, and the installation needs to be as simple as possible. The servers will likely be shipped to the retail store, then cabled and powered by a retail employee, and the site-specific information needs to be provided to the system in the simplest way possible, ideally without any action from the retail employee.

Out of Scope

Q: how challenging will it be to support multi-node clusters with this feature?

Background, and strategic fit

< What does the person writing code, testing, documenting need to know? >

Assumptions

< Are there assumptions being made regarding prerequisites and dependencies?>

< Are there assumptions about hardware, software or people resources?>

Customer Considerations

< Are there specific customer environments that need to be considered (such as working with existing h/w and software)?>

< Are there Upgrade considerations that customers need to account for or that the feature should address on behalf of the customer?>

<Does the Feature introduce data that could be gathered and used for Insights purposes?>

Documentation Considerations

< What educational or reference material (docs) is required to support this product feature? For users/admins? Other functions (security officers, etc)? >

< What does success look like?>

< Does this feature have doc impact? Possible values are: New Content, Updates to existing content, Release Note, or No Doc Impact>

< If unsure and no Technical Writer is available, please contact Content Strategy. If yes, complete the following.>

- <What concepts do customers need to understand to be successful in [action]?>

- <How do we expect customers will use the feature? For what purpose(s)?>

- <What reference material might a customer want/need to complete [action]?>

- <Is there source material that can be used as reference for the Technical Writer in writing the content? If yes, please link if available. >

- <What is the doc impact (New Content, Updates to existing content, or Release Note)?>

Interoperability Considerations

< Which other products and versions in our portfolio does this feature impact?>

< What interoperability test scenarios should be factored by the layered product(s)?>

Questions

| Question | Outcome |

Epic Goal

- Install SNO within 10 minutes

Why is this important?

- SNO installation takes around 40+ minutes.

- This makes SNO less appealing when compared to k3s/microshift.

- We should analyze the SNO installation, figure out why it takes so long, and come up with ways to optimize it

Scenarios

- ...

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

- ...

Dependencies (internal and external)

- ...

Previous Work (Optional):

- …

Open questions:

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

- I'm not sure this is a CVO issue, but I think CVO is the one creating the namespace. CVO also renders some manifests during bootkube, so it seems like the right component.

Description of problem:

The bootkube scripts spend ~1 minute failing to apply manifests while waiting for the openshift-config namespace to get created.

Version-Release number of selected component (if applicable):

4.12

How reproducible:

100%

Steps to Reproduce:

1. Run the POC using the makefile here: https://github.com/eranco74/bootstrap-in-place-poc

2. Observe the bootkube logs (pre-reboot)

Actual results:

Jan 12 17:37:09 master1 cluster-bootstrap[5156]: Failed to create "0000_00_cluster-version-operator_01_adminack_configmap.yaml" configmaps.v1./admin-acks -n openshift-config: namespaces "openshift-config" not found

....

Jan 12 17:38:27 master1 cluster-bootstrap[5156]: "secret-initial-kube-controller-manager-service-account-private-key.yaml": failed to create secrets.v1./initial-service-account-private-key -n openshift-config: namespaces "openshift-config" not found

Here are the logs from another installation showing that it's not 1 or 2 manifests that require this namespace to get created earlier:

Jan 12 17:38:10 master1 bootkube.sh[5121]: "etcd-ca-bundle-configmap.yaml": failed to create configmaps.v1./etcd-ca-bundle -n openshift-config: namespaces "openshift-config" not found

Jan 12 17:38:10 master1 bootkube.sh[5121]: "etcd-client-secret.yaml": failed to create secrets.v1./etcd-client -n openshift-config: namespaces "openshift-config" not found

Jan 12 17:38:10 master1 bootkube.sh[5121]: "etcd-metric-client-secret.yaml": failed to create secrets.v1./etcd-metric-client -n openshift-config: namespaces "openshift-config" not found

Jan 12 17:38:10 master1 bootkube.sh[5121]: "etcd-metric-serving-ca-configmap.yaml": failed to create configmaps.v1./etcd-metric-serving-ca -n openshift-config: namespaces "openshift-config" not found

Jan 12 17:38:10 master1 bootkube.sh[5121]: "etcd-metric-signer-secret.yaml": failed to create secrets.v1./etcd-metric-signer -n openshift-config: namespaces "openshift-config" not found

Jan 12 17:38:10 master1 bootkube.sh[5121]: "etcd-serving-ca-configmap.yaml": failed to create configmaps.v1./etcd-serving-ca -n openshift-config: namespaces "openshift-config" not found

Jan 12 17:38:10 master1 bootkube.sh[5121]: "etcd-signer-secret.yaml": failed to create secrets.v1./etcd-signer -n openshift-config: namespaces "openshift-config" not found

Jan 12 17:38:10 master1 bootkube.sh[5121]: "kube-apiserver-serving-ca-configmap.yaml": failed to create configmaps.v1./initial-kube-apiserver-server-ca -n openshift-config: namespaces "openshift-config" not found

Jan 12 17:38:10 master1 bootkube.sh[5121]: "openshift-config-secret-pull-secret.yaml": failed to create secrets.v1./pull-secret -n openshift-config: namespaces "openshift-config" not found

Jan 12 17:38:10 master1 bootkube.sh[5121]: "openshift-install-manifests.yaml": failed to create configmaps.v1./openshift-install-manifests -n openshift-config: namespaces "openshift-config" not found

Jan 12 17:38:10 master1 bootkube.sh[5121]: "secret-initial-kube-controller-manager-service-account-private-key.yaml": failed to create secrets.v1./initial-service-account-private-key -n openshift-config: namespaces "openshift-config" not found
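To put a number on the delay, the journal output can be scanned for the "namespaces ... not found" errors. The following is a minimal sketch, not part of the POC: the `summarize` helper, the regular expression, and the `year` default are assumptions made for illustration, and the two sample lines are the first and last cluster-bootstrap entries quoted in this report.

```python
import re
from datetime import datetime

# Shape of the journal lines quoted in this report: timestamp, host, unit[pid],
# a quoted manifest file name, and the 'namespaces "<name>" not found' error.
LINE_RE = re.compile(
    r'^(?P<ts>[A-Z][a-z]{2} +\d{1,2} \d{2}:\d{2}:\d{2}) \S+ \S+\[\d+\]: '
    r'.*?"(?P<manifest>[^"]+\.yaml)".*namespaces "(?P<ns>[^"]+)" not found'
)


def summarize(lines, year=2023):
    """Return the retry window and the manifests blocked on a missing namespace.

    The short journal timestamps carry no year; `year` is an assumption used
    only so the timestamps parse. It does not affect the computed duration.
    """
    stamps, manifests = [], set()
    for line in lines:
        m = LINE_RE.match(line.strip())
        if not m:
            continue  # ignore unrelated log lines
        stamps.append(datetime.strptime(f"{year} {m['ts']}", "%Y %b %d %H:%M:%S"))
        manifests.add(m["manifest"])
    if not stamps:
        return {"seconds": 0, "manifests": []}
    return {
        "seconds": int((max(stamps) - min(stamps)).total_seconds()),
        "manifests": sorted(manifests),
    }


# First and last cluster-bootstrap lines from this report.
SAMPLE = [
    'Jan 12 17:37:09 master1 cluster-bootstrap[5156]: Failed to create "0000_00_cluster-version-operator_01_adminack_configmap.yaml" configmaps.v1./admin-acks -n openshift-config: namespaces "openshift-config" not found',
    'Jan 12 17:38:27 master1 cluster-bootstrap[5156]: "secret-initial-kube-controller-manager-service-account-private-key.yaml": failed to create secrets.v1./initial-service-account-private-key -n openshift-config: namespaces "openshift-config" not found',
]

if __name__ == "__main__":
    print(summarize(SAMPLE))
```

For the two sample lines the window is 78 seconds (17:37:09 to 17:38:27), which is consistent with the ~1 minute quoted in the description.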

Expected results:

Resources are expected to be created successfully without having to wait for the namespace to get created.
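One way to picture the expected behavior is an apply order in which Namespace objects come before the resources that live in them. The sketch below only illustrates that ordering idea. It does not describe how cluster-bootstrap or CVO actually order manifests. The helper names are hypothetical, and manifests are modelled as plain dictionaries rather than parsed YAML files.

```python
def order_for_apply(manifests):
    """Stable sort that moves Namespace objects ahead of everything else."""
    # False sorts before True, so Namespace manifests come first; the relative
    # order of all other manifests is preserved.
    return sorted(manifests, key=lambda m: m.get("kind") != "Namespace")


def undeclared_namespaces(manifests):
    """Namespaces that are referenced but never declared in this manifest set."""
    declared = {
        m["metadata"]["name"] for m in manifests if m.get("kind") == "Namespace"
    }
    used = {m.get("metadata", {}).get("namespace") for m in manifests} - {None}
    return sorted(used - declared)


# Illustrative manifest set; object names are taken from the logs in this report.
MANIFESTS = [
    {"kind": "ConfigMap", "metadata": {"name": "admin-acks", "namespace": "openshift-config"}},
    {"kind": "Secret", "metadata": {"name": "pull-secret", "namespace": "openshift-config"}},
    {"kind": "Namespace", "metadata": {"name": "openshift-config"}},
]

if __name__ == "__main__":
    for m in order_for_apply(MANIFESTS):
        print(m["kind"], m["metadata"]["name"])
    # Dropping the Namespace manifest reproduces the situation in the logs.
    print(undeclared_namespaces(MANIFESTS[:2]))
```

The `undeclared_namespaces` check reflects the case in this report, where the manifest set being applied references openshift-config but the namespace itself is created by a different component.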

Additional info:

openshift-service-ca service-ca pod takes a few minutes to start when installing SNO